
An example of using (Un)Perplexed Spready in a real-life use case: furniture catalog taxonomy classification


- Written by Super User

- Category: (Un)Perplexed Spready

Introduction

(Un)Perplexed Spready is AI-driven spreadsheet software designed to put AI models to useful work inside spreadsheets. Typical examples of such usage are extracting multiple attributes from a column of unstructured text descriptions, standardizing names, reformatting and standardizing addresses, and categorizing and classifying products.

In this article, we demonstrate how (Un)Perplexed Spready can be used in a real business use case: categorizing products according to a predefined alternative taxonomy list, where the AI combines lookups against the taxonomy list with advanced reasoning and deep internet research.

For this exercise we used the glm-4.7 model provided by Ollama Cloud, together with the matasoft_web_search and matasoft_web_reader tools, in (Un)Perplexed Spready.

We will also use this opportunity to demonstrate how to properly reference multi-cell ranges from other sheets using the "RANGE:" instruction, a syntax specific to (Un)Perplexed Spready.

Task Description

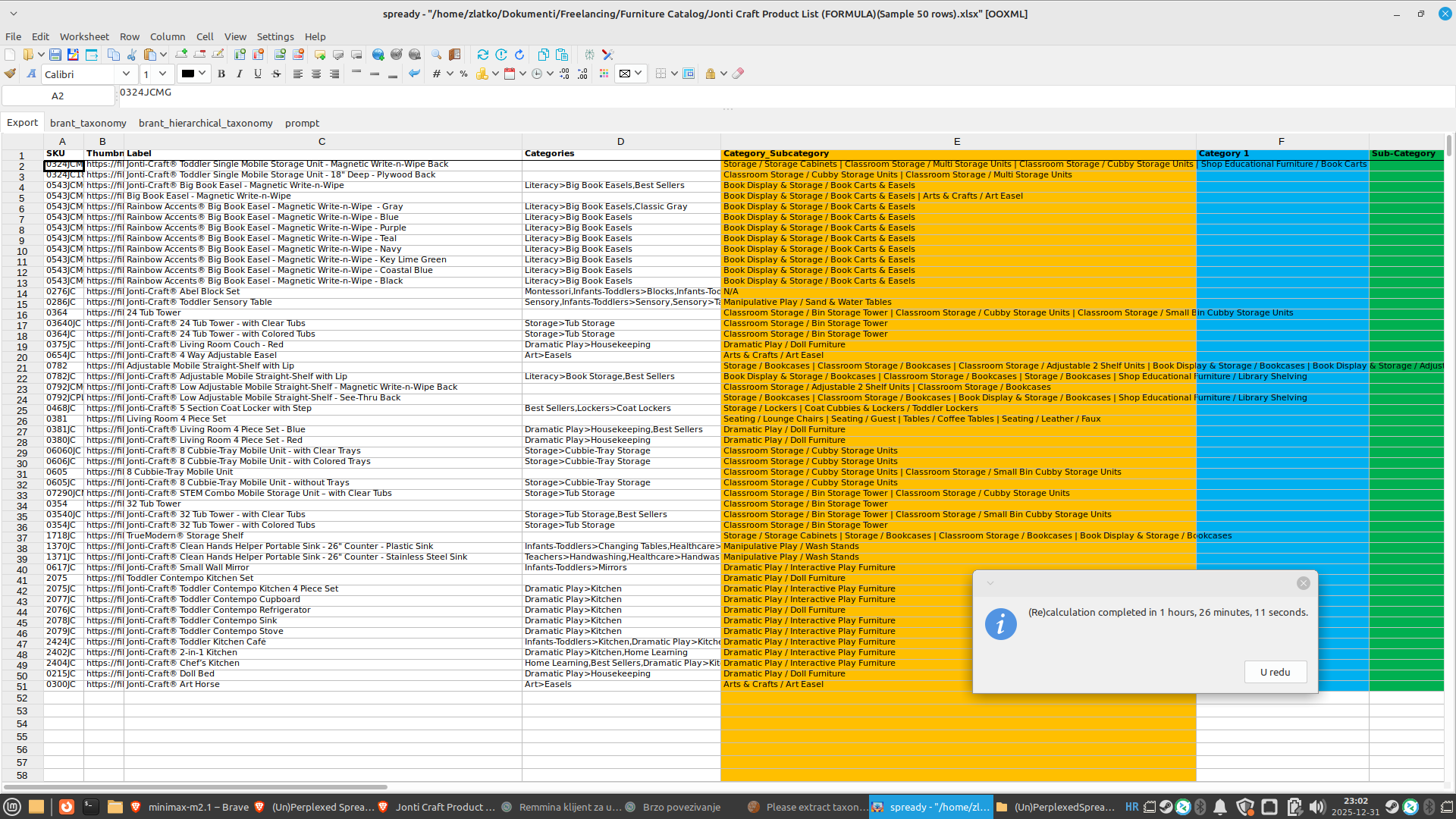

We have a dataset of 2751 furniture-related product records that are already categorized internally with a hierarchical taxonomy.

The task is to map the products to an alternative taxonomy, provided in a separate lookup sheet.

For the sake of this presentation, we use a sample subset of 50 rows from the master dataset.

Our Tool - (Un)Perplexed Spready

(Un)Perplexed Spready is an advanced spreadsheet software developed by Matasoft, designed to bring artificial intelligence directly into everyday spreadsheet tasks. Unlike traditional tools like Excel or Google Sheets, it integrates powerful AI language models into spreadsheet formulas, enabling automation of complex data processing tasks such as extraction, classification, annotation, and categorization.

Key features include:

- AI-embedded formulas: Users can utilize advanced language models directly in spreadsheet cells to perform data analysis, generate insights, and automate repetitive tasks.

- Multiple AI integration options:

  - PERPLEXITY functions connect to commercial-grade AI via Perplexity AI’s API, offering high-quality, context-aware responses.

  - ASK_LOCAL functions allow users to run free, private AI models (such as Mistral, DeepSeek, Llama2, Gemma3, OLMo2 etc.) locally through the Ollama platform, providing privacy and eliminating API fees.

  - ASK_REMOTE functions enable access to remotely hosted AI models, mainly for demo and testing purposes.

- User-friendly interface: The software maintains familiar spreadsheet features such as cell formatting, copy/paste, and advanced sorting, while adding innovative AI-driven interactivity.

- Automated data management: Tasks like extracting information from unstructured data, converting units, standardizing formats, and categorizing entries can be handled automatically by the AI, reducing manual effort and errors.

- Flexible for all users: It is suitable for both beginners and advanced users, offering significant time savings and improved accuracy in data processing.

By embedding AI directly into spreadsheet workflows, (Un)Perplexed Spready transforms the way users interact with data, allowing them to focus on creative and strategic work while the AI manages the labor-intensive aspects of spreadsheet management.

Perplexity AI

Perplexity AI is an advanced AI-powered search engine and conversational assistant that provides direct, well-organized answers instead of just links. It uses real-time web search and large language models to quickly synthesize information and present it in a clear, conversational format.

Key features include conversational search, real-time information gathering, source transparency, and support for text, images, and code. It’s accessible via web, mobile apps, and browser extensions, and is designed to be user-friendly and ad-free.

Perplexity AI is used for research, content creation, data analysis, and more, helping users find accurate information efficiently and interactively.

Ollama and Ollama Cloud

Ollama is a tool that lets you download and run large language models (LLMs) entirely on your own computer—no cloud account or API key required. You install it once, then pull whichever open-source model you want (Llama 3, Mistral, Gemma, Granite …) and talk to it from the command line or from your own scripts. Think of it as a lightweight, self-contained “Docker for LLMs”: it bundles the model weights, runtime, and a simple API server into one binary so you can spin up a private, local AI assistant with a single command.

You can find more information here: https://ollama.com/

Recently, Ollama introduced a new cloud-hosted offering featuring some of the largest and most capable open-source AI models. While Ollama’s local setup has always been excellent for privacy and control, running large language models (LLMs) locally requires modern, high-performance hardware — something most standard office computers simply lack. As a result, using (Un)Perplexed Spready with locally installed models for large or complex spreadsheet operations can quickly become impractical due to hardware limitations.

This is where Ollama Cloud steps in. It provides scalable access to powerful open-source models hosted in the cloud, delivering the same flexibility without demanding high-end hardware. The pricing is impressively low — a fraction of what comparable commercial APIs like Perplexity AI typically cost. This makes it an ideal solution for users and organizations who want high-quality AI performance without significant infrastructure investment.

We’re genuinely excited about this development — Ollama Cloud bridges the gap between performance, affordability, and accessibility. Well done, Ollama — and thank you for making advanced AI available to everyone!

You can find more information here: https://ollama.com/cloud

SearXNG

SearXNG is a free, open-source “meta-search” engine that you can run yourself. Instead of crawling the web on its own, it forwards your query to dozens of public search services (Google, Bing, DuckDuckGo, Mojeek, Qwant etc.), merges the results, strips out trackers and ads, and returns a single clean page. All queries originate from your server/PC, so the upstream engines never see your IP or set cookies on your browser. In short: it’s a privacy-respecting, self-hostable proxy that turns multiple third-party search engines into one, without logging or profiling users.

Open WebUI

Open WebUI (formerly “Ollama WebUI”) is a browser-based, self-hosted dashboard that gives you a ChatGPT-like interface for any LLM you run locally or remotely.

It speaks the same API that Ollama exposes, so you just point it at `http://localhost:11434` (or any other OpenAI-compatible endpoint) and instantly get a full-featured GUI: threaded chats, markdown, code highlighting, LaTeX, image uploads, document ingestion (RAG), multi-model support, user log-ins, and role-based access.

Think of it as “a private ChatGPT in a Docker container” – you keep your models and data on your own machine, but your users interact through a polished web front-end.

Matasoft Web Reader and Matasoft Web Search Tools

Matasoft Web Search and Matasoft Web Reader are free tools for Open WebUI provided by Matasoft. They combine static and dynamic page reading (BeautifulSoup + Selenium). By default, they try to read a web page with the fast method (BeautifulSoup); if that fails, or if JavaScript or SPA content needs rendering, they fall back to the slow method (Selenium + Chrome). Whether JavaScript rendering is needed is detected automatically with 4-layer heuristics (block patterns, SPA frameworks, text volume, structure). This means you get real text instead of empty shells or JavaScript templates.
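The static-first, dynamic-fallback behaviour described above can be sketched as follows. This is not the Matasoft tools' code: the marker lists, the thresholds and the function names are assumptions made for illustration, the heuristic here has three layers instead of four, and the slow renderer is passed in as a callable (in the real tools that role is played by Selenium + Chrome).

```python
from html.parser import HTMLParser
from typing import Callable

# Hypothetical fingerprints (all lowercase); the real tools' rules may differ.
SPA_MARKERS = ('id="root"', 'id="app"', "__next_data__", "ng-version", "data-reactroot")
BLOCK_MARKERS = ("enable javascript", "turn on javascript")


class _TextExtractor(HTMLParser):
    """Collects visible text, skipping <script> and <style> contents."""

    def __init__(self) -> None:
        super().__init__()
        self._skip_depth = 0
        self.chunks: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth and data.strip():
            self.chunks.append(data.strip())


def visible_text(html: str) -> str:
    parser = _TextExtractor()
    parser.feed(html)
    parser.close()  # flush any buffered trailing text
    return " ".join(parser.chunks)


def needs_js_rendering(html: str, min_chars: int = 200) -> bool:
    """Layered heuristic: block notices, too little text, SPA fingerprint with modest text."""
    text = visible_text(html)
    if any(marker in text.lower() for marker in BLOCK_MARKERS):
        return True
    if len(text) < min_chars:
        return True
    lowered = html.lower()
    return any(marker in lowered for marker in SPA_MARKERS) and len(text) < 5 * min_chars


def read_page(html: str, render_dynamic: Callable[[], str]) -> str:
    """Return static text, or text from the slow renderer when JS rendering seems needed."""
    if needs_js_rendering(html):
        return visible_text(render_dynamic())
    return visible_text(html)
```

The point of the design is that the expensive browser render is only paid for when the cheap parse looks like an empty SPA shell or a "please enable JavaScript" notice; in a call such as read_page(html, render_dynamic=lambda: fetch_with_browser(url)), fetch_with_browser is a hypothetical stand-in for a Selenium-based fetch.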

The Matasoft Web Search tool uses a locally installed SearXNG meta-search engine.

Matasoft Web Search (matasoft_web_search) tool can be downloaded here: https://openwebui.com/posts/6e255cfc-f187-40d0-9417-3e1ca1d3c9e5

Matasoft Web Reader (matasoft_web_reader) tool can be downloaded here: https://openwebui.com/posts/a7880f57-247d-4883-a250-2dd54e2e6dff

Methodology and AI-driven Formula Used

The full dataset contains 2751 records, but for the sake of this presentation we calculate only the first 50.

Since the LLM prompt to be embedded in the spreadsheet formula is fairly long, we put it in a text cell in a separate sheet called "prompt".

The prompt text is the following: "Input1 contains a product name, Input2 contains internal hiearchical taxonomical category, while Input3 contains alternative full 2-level hiearachical taxonomy to which you need to map the product. Your task is to identify and return applicable categories for the product, from the Input3 taxonomy list. There could be multiple appropriate taxonomy categories to be assigned from the taxonomy list given in Input3. Return all applicable taxonomy categories from the Input3. If multiple categories are applicable, return them separated by ' | ' separator. be concise, return only categories as defined in the Input3 taxonomy list (as is), without any modification. Return only mapped taxonomical categories, without any additional comments or explanations, not citations marks.

For determining appropriate categories from Input3, you should primarily utilize information given for existing internal categorization, given in Input2. However, if Input2 is blank or unclear, then you should perform product research (Amazon / Staples) to determine appropriate taxonomy category.".

The lookup taxonomy list, to which each product needs to be mapped, is placed in a separate sheet called "brant_hierarchical_taxonomy".

We use column E, which holds the full 2-level taxonomy path, with the two levels joined by the " / " separator.

Now we have all elements to construct our AI driven formula in the following way:

=ASK_LOCAL3(C2,D2, "RANGE:brant_hierarchical_taxonomy!$E$2:$E$130", prompt!$A$1)

Let's break down the elements of the formula.

The "=ASK_LOCAL3" part instructs (Un)Perplexed Spready to call the currently configured Ollama model with three input ranges, given as the first three arguments; the fourth argument must be the LLM prompt text.

We configured an Ollama Cloud model exposed through the Open WebUI endpoint: glm-4.7, with two Open WebUI tools, matasoft_web_reader and matasoft_web_search, available to the AI.

At the time of writing, GLM-4.7 is, in our assessment, the best open-source LLM available, surpassing kimi-k2:1t on benchmarks.

The three input ranges are defined by this part of the formula: C2,D2,"RANGE:brant_hierarchical_taxonomy!$E$2:$E$130"

The first two arguments, C2 and D2, refer to cells in the master dataset sheet: column "Label" (the product name) and column "Categories" (the current internal taxonomy classification).

The third argument, "RANGE:brant_hierarchical_taxonomy!$E$2:$E$130", refers to the lookup taxonomy we need to map to. It needs a more thorough explanation, because it uses syntax specific to (Un)Perplexed Spready. It is a textual representation of a multi-cell range in another sheet; in this case, the range E2:E130 in column E of the brant_hierarchical_taxonomy sheet. Note that the expression must be written as text: it must be enclosed in double quotes, must begin with the "RANGE:" instruction, and must use the "!" symbol after the sheet name. This convention must be followed in (Un)Perplexed Spready whenever you reference a multi-cell range from another sheet. For a single cell from another sheet, or for a multi-cell range in the current sheet, you can use standard notation; the textual "RANGE:" representation is required only for multi-cell ranges referenced from another sheet.
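To make the convention concrete, here is a minimal sketch of how a "RANGE:" string could be split into its sheet name and cell bounds. It illustrates the syntax only and is not (Un)Perplexed Spready's actual parser; the function names parse_range_reference and column_index and the returned tuple layout are hypothetical.

```python
import re

# "RANGE:<sheet>!<start>:<end>", with optional $ anchors on columns and rows.
_RANGE_RE = re.compile(
    r"^RANGE:(?P<sheet>[^!]+)!\$?(?P<c1>[A-Z]+)\$?(?P<r1>\d+):\$?(?P<c2>[A-Z]+)\$?(?P<r2>\d+)$"
)


def column_index(letters: str) -> int:
    """Convert spreadsheet column letters to a 1-based index (A=1, Z=26, AA=27)."""
    index = 0
    for ch in letters:
        index = index * 26 + (ord(ch) - ord("A") + 1)
    return index


def parse_range_reference(text: str) -> tuple[str, int, int, int, int]:
    """Return (sheet, first_col, first_row, last_col, last_row) for a RANGE: string."""
    match = _RANGE_RE.match(text.strip())
    if match is None:
        raise ValueError(f"not a RANGE: reference: {text!r}")
    return (
        match["sheet"],
        column_index(match["c1"]), int(match["r1"]),
        column_index(match["c2"]), int(match["r2"]),
    )
```

A string without the "RANGE:" prefix or the "!" separator is rejected, which mirrors the rules above. Once the sheet and bounds are known, the values of E2 to E130 could be read and joined (for example one category per line) to form the text the model sees as Input3; how the application actually joins them is not covered here.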

Finally, the fourth argument, prompt!$A$1, references the cell in the "prompt" sheet that holds the actual LLM prompt. Since it is a single cell from another sheet, we do not need the textual range workaround (the "RANGE:" instruction explained above).


Notice that our prompt contains some typos, but they did not prevent the GLM-4.7 model from correctly interpreting it and calculating the formula. This is the beauty of current advanced LLMs: they understand instructions and context even when there are typos and other minor mistakes.

Our spreadsheet is now ready to be executed.

We trigger the calculation of the AI-driven formulas with the "Recalculate Formulas" button.

This command is also available in the toolbar.

The "Recalculate Formulas" command triggers calculation of the whole spreadsheet.

Results

Since we also included web search with deep internet research, the calculation took considerable time. Without the matasoft_web_search tool and a prompt instructing web search, it would have been much, much faster. For this particular task we could have used a simpler approach without web search, since the product names alone would probably give the LLM enough information to classify the products, but we deliberately wanted to demonstrate how web search can be combined with advanced reasoning to provide superb results.

As you can see from the screenshot, the GLM-4.7 model produced a precise, detailed mapping to the alternative 2-level taxonomy, including multiple applicable categories where appropriate, as instructed by the prompt.

We exported the raw result to a CSV file using the "Export as" option.

We then processed the CSV file further in OpenRefine, splitting the multiple mapped categories into separate rows, which gave us the final mapping.
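We did this step in OpenRefine. For readers who prefer a script, the same "one category per row" split can be sketched with Python's standard csv module. The column name you pass in and the in_taxonomy flag are our own additions for illustration: since the prompt asks the model to return categories exactly as listed, a simple membership test against the values of column E is a cheap way to spot answers that drifted from the taxonomy list.

```python
import csv
import io
from typing import Optional


def explode_categories(
    csv_text: str,
    category_column: str,
    taxonomy: Optional[set[str]] = None,
    separator: str = "|",
) -> list[dict[str, str]]:
    """Split 'A | B' cells into one row per category, keeping rows with no category."""
    rows = []
    for record in csv.DictReader(io.StringIO(csv_text)):
        cell = record[category_column] or ""
        categories = [c.strip() for c in cell.split(separator) if c.strip()] or [""]
        for category in categories:
            row = {**record, category_column: category}
            if taxonomy is not None:
                # The prompt asks for categories "as is", so flag anything not found verbatim.
                row["in_taxonomy"] = "yes" if category in taxonomy else "no"
            rows.append(row)
    return rows


def to_csv(rows: list[dict[str, str]]) -> str:
    """Serialize rows back to CSV text (empty string if there are no rows)."""
    if not rows:
        return ""
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=list(rows[0].keys()))
    writer.writeheader()
    writer.writerows(rows)
    return out.getvalue()
```

Splitting on "|" and stripping whitespace handles the " | " separator requested in the prompt without being thrown off by the " / " inside each taxonomy path.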

Conclusion

(Un)Perplexed Spready is a perfect tool for data extraction and data categorization tasks in spreadsheets with textual data. It can combine the most advanced LLMs (such as GLM-4.7, kimi-k2:1t or deepseek-3.2) with deep web research (e.g. using the Matasoft Web Search tool) and a long-context lookup taxonomy list to provide accurate mapping and classification. We demonstrated this on a real-life example of mapping a list of products to an alternative lookup taxonomy.

As always, our motto is: let AI do the heavy lifting while you drink your coffee! AI should be a tool that frees humans from tedious, repetitive work, so that human intellect has more time for creative work. And leisure too!

Why Choose (Un)Perplexed Spready?

- Revolutionary Integration: Get the best of spreadsheets and AI combined, all in one intuitive interface.

- Market-Leading Flexibility: Choose between Perplexity AI and locally installed LLMs, or even our free remote model hosted on our server, whichever suits your needs.

- User-Centric Design: With familiar spreadsheet features seamlessly merged with AI capabilities, your productivity is bound to soar.

- It's powerful: only your imagination and hardware specs limit what you can do with the help of AI! If data is the new gold, then (Un)Perplexed Spready is your miner!

- It's fun: of course it is fun when you are drinking coffee and scrolling the news while the AI does the hard work. Drink your coffee and let the AI work for you!

Get Started!

Join the revolution today. Let (Un)Perplexed Spready free you from manual data crunching and unlock the full potential of AI—right inside your spreadsheet. Whether you're a business analyst, a researcher, or just an enthusiast, our powerful integration will change the way you work with data.

You can find more practical information on how to set up and use the (Un)Perplexed Spready software here: Using (Un)Perplexed Spready

Download

Download the (Un)Perplexed Spready software: Download (Un)Perplexed Spready

Request Free Evaluation Period

When you run the application, you will be presented with the About form, which shows an automatically generated Machine Code for your computer. Send us an email with your machine code and ask for a trial license. We will send you a trial license key that unlocks the premium AI functions for a limited time.

Contact us at the following email:

Sales Contact

Purchase Commercial License

For the price of two beers a month, you can have a faithful co-worker, the AI-whispering spreadsheet software, doing the hard work while you drink your coffee!

You can purchase the commercial license here: Purchase License for (Un)Perplexed Spready

AI-driven Spreadsheet Processing Services

We are also offering AI-driven spreadsheet processing services with (Un)Perplexed Spready software.

If you need large-scale data extraction, data categorization, data classification, data annotation or data labeling, check out our corresponding services here: AI-driven Spreadsheet Processing Services

Further Reading

Download (Un)Perplexed Spready

Purchase License for (Un)Perplexed Spready

Leveraging AI on Low-Spec Computers: A Guide to Ollama Models for (Un)Perplexed Spready

(Un)Perplexed Spready with web search enabled Ollama models


Introduction

At Matasoft, we believe that technology exists to amplify human creativity, not to burden it with repetitive and tedious tasks. Yet, countless professionals still spend hours manually entering and editing complex spreadsheets filled with unstructured text — a type of data traditional spreadsheet formulas simply cannot process effectively.

Until recently, the only solution was painstaking manual work, row by row. That has changed with the rise of large language models (LLMs), which bring contextual understanding and intelligent automation into the realm of data processing.

To harness this advancement, we developed (Un)Perplexed Spready — software that seamlessly integrates artificial intelligence into spreadsheet formulas. It enables machines to extract, analyze, and categorize messy data effortlessly, allowing users to focus on insights rather than data cleanup.

Our philosophy is simple: let AI do the hard work while you enjoy your coffee.

About (Un)Perplexed Spready

Our (Un)Perplexed Spready application is a next-generation spreadsheet tool that embeds AI prompts directly inside spreadsheet formulas, enabling true intelligent data processing powered by artificial intelligence. You can find detailed information on our official page: https://matasoft.hr/QTrendControl/index.php/un-perplexed-spready.

At present, the software supports two types of AI integrations: Perplexity AI – a highly capable web search–oriented commercial AI, and Ollama – a local platform hosting a variety of open-source large language models you can run directly on your own machine.

While Perplexity AI offers excellent contextual accuracy and real-time web data retrieval, its pricing structure makes it less accessible for small businesses or individual users, as token usage costs can quickly become significant. For this reason, this article focuses on a more affordable alternative: the Ollama-based setup, which provides local AI processing at minimal or zero cost, making advanced spreadsheet automation achievable for everyone.

About Ollama


By default, Ollama exposes its API endpoint at http://localhost:11434/api/chat

About Ollama Cloud


The Ollama Cloud API endpoint is https://ollama.com/api/chat
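Both endpoints accept the same /api/chat request body, so switching between local and cloud is mostly a matter of changing the base URL and, for the cloud, sending the API key as a Bearer token. The sketch below only builds the request with Python's standard library; the helper name ollama_chat_request is ours, and the payload fields (model, messages, stream) follow Ollama's documented chat API.

```python
import json
import urllib.request
from typing import Optional

LOCAL_OLLAMA = "http://localhost:11434"
OLLAMA_CLOUD = "https://ollama.com"


def ollama_chat_request(base_url: str, model: str, prompt: str,
                        api_key: Optional[str] = None) -> urllib.request.Request:
    """Build (but do not send) a non-streaming POST to <base_url>/api/chat."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,  # ask for one JSON response instead of a stream of chunks
    }
    headers = {"Content-Type": "application/json"}
    if api_key:  # Ollama Cloud expects the API key as a Bearer token
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(
        f"{base_url}/api/chat",
        data=json.dumps(payload).encode("utf-8"),
        headers=headers,
        method="POST",
    )
```

Sending the request with urllib.request.urlopen and parsing the reply with json.load yields a single response object whose ["message"]["content"] field holds the model's answer.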

About Ollama Desktop Application

Ollama has also recently released a desktop application that adds interesting capabilities, such as integration with a web search tool.

Unfortunately, it is currently available only for Windows and Mac, not for Linux. We hope this will change in the future. We haven't checked, but perhaps it can be run on Linux through the Wine compatibility layer?

About SearXNG


Unfortunately, while SearXNG is quite easy to install on Linux, installing it on Windows is not so easy, and depending on the Windows version it might be impossible.

About Open WebUI


What is interesting is that Open WebUI can integrate tools such as web search and expose them externally through its API endpoint at 'http://localhost:3000/api/chat/completions'.

Thus, instead of using the Ollama endpoint 'http://localhost:11434/api/chat' or 'https://ollama.com/api/chat', we will use 'http://localhost:3000/api/chat/completions' with the integrated SearXNG-enabled "web_search" tool. The great thing is that we can use both local Ollama models and Ollama Cloud models through the same Open WebUI API endpoint ('http://localhost:3000/api/chat/completions').
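For comparison, a request to Open WebUI's endpoint uses the OpenAI-style chat completions body and the Open WebUI API key as a Bearer token. The sketch assumes that tools are enabled per request through a tool_ids list, which is how we understand Open WebUI's chat completions API to work; please verify the field name against the Open WebUI version you run. The helper names open_webui_request and answer_text are hypothetical.

```python
import json
import urllib.request

OPEN_WEBUI_URL = "http://localhost:3000/api/chat/completions"


def open_webui_request(api_key: str, model: str, prompt: str,
                       tool_ids: tuple[str, ...] = ("web_search",)) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request for Open WebUI."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "tool_ids": list(tool_ids),  # assumption: IDs of Open WebUI tools to enable
    }
    return urllib.request.Request(
        OPEN_WEBUI_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )


def answer_text(response_body: bytes) -> str:
    """Extract the assistant reply from an OpenAI-style chat completions response."""
    return json.loads(response_body)["choices"][0]["message"]["content"]
```

Only the model name changes between a local and a cloud model, because Open WebUI routes the call to whichever Ollama connection provides that model.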

Working Solution on Linux

Ollama Setup

Install Ollama with the following command in a terminal:

curl -fsSL https://ollama.com/install.sh | sh

Search and browse local and cloud models available here: https://ollama.com/search

Download and install local LLM models by using "pull" command, like this:

ollama pull granite4:tiny-h

Register and sign in to Ollama here: https://ollama.com/cloud to be able to use Ollama Cloud models. Optionally (and highly recommended), upgrade to the PRO subscription.

Also create an API key here: https://ollama.com/settings/keys

Then you can register cloud models with commands like this:

ollama pull gpt-oss:20b-cloud

Note the difference between actually installing a local copy of a model and registering the cloud variant of the same model.

Registering a cloud model is needed for running it via the CLI in a terminal (for example "ollama run gpt-oss:120b-cloud") or inside the Ollama desktop application.

However, when you use a cloud model via the API, this registration is not needed; instead, you call the model through the API endpoint at https://ollama.com/api/chat

Read the API-related documentation here:

Configure Ollama Service

Open the ollama.service file in the /etc/systemd/system folder with sudo and add these two lines to its [Service] section:

Environment="OLLAMA_HOST=0.0.0.0:11434"

Environment="OLLAMA_ORIGINS=*"

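This allows the locally installed Ollama to integrate properly with Open WebUI running in a Docker container. After saving the file, reload systemd and restart Ollama (sudo systemctl daemon-reload, then sudo systemctl restart ollama) so the new settings take effect.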
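One way to confirm that Ollama is reachable after this change is to list its models through the /api/tags endpoint, for example from another machine or from inside a container (using host.docker.internal). A minimal sketch with the standard library follows; the function names are ours, and the parsing is kept separate from the HTTP call so it can be checked offline.

```python
import json
import urllib.request


def parse_model_names(body: bytes) -> list[str]:
    """Extract model names from an Ollama /api/tags response."""
    return [model["name"] for model in json.loads(body).get("models", [])]


def list_models(base_url: str = "http://localhost:11434", timeout: float = 5.0) -> list[str]:
    """Query <base_url>/api/tags; raises urllib.error.URLError if Ollama is unreachable."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as response:
        return parse_model_names(response.read())
```

If list_models("http://localhost:11434") works on the host but the same call against the machine's LAN address fails, Ollama is most likely still bound to localhost only.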

SearXNG Setup

There are several ways to install and set up SearXNG, but I recommend the Docker installation described here: https://docs.searxng.org/admin/installation-docker.html.

However, the instructions on that page are not entirely accurate, so we need to modify them a bit.

Create Directories for Configuration and Persistent Data

In terminal type:

mkdir -p ./searxng/config/ ./searxng/data/

cd ./searxng/

Run the Docker Container

Type in terminal:

docker rm -f searxng

docker run --name searxng -d -p 8080:8080 -v "./config/:/etc/searxng/" -v "./data/:/var/cache/searxng/" --add-host=host.docker.internal:host-gateway docker.io/searxng/searxng:latest

Edit SearxNG Configuration for JSON Support

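Find the settings.yml configuration file (it is in the config directory created above, which is mounted as /etc/searxng/ inside the container) and add a line enabling the JSON format. This is absolutely critical: without it, integration with SearXNG will not work! (Somebody please tell the SearXNG developers to enable JSON support by default!)

In the "search" section, under the "formats:" list, add a "- json" entry below the existing "- html" entry, then restart the container (docker container restart searxng) so the change is picked up.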

Starting SearXNG

You can start SearXNG with this command in terminal:

docker container start searxng

The SearXNG should be visible on http://localhost:8080/

Verify that JSON format is enabled, by loading the following page in browser: http://localhost:8080/search?q=%3Cquery%3E&format=json

You should see the search results returned as JSON rather than as an HTML page.
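The same check can also be scripted. The sketch below builds the query URL with proper URL encoding and extracts title, URL and snippet from the JSON reply; it assumes the results list and the title, url and content fields that SearXNG's JSON output uses, and the helper names are hypothetical. If JSON is still disabled, SearXNG answers with an HTTP error (typically 403 Forbidden) and urlopen raises urllib.error.HTTPError instead of returning results.

```python
import json
import urllib.parse
import urllib.request


def searxng_url(query: str, base: str = "http://localhost:8080/search") -> str:
    """Build a SearXNG search URL that requests JSON output."""
    return f"{base}?{urllib.parse.urlencode({'q': query, 'format': 'json'})}"


def parse_results(body: bytes, limit: int = 5) -> list[tuple[str, str, str]]:
    """Return up to `limit` (title, url, snippet) tuples from a SearXNG JSON response."""
    results = json.loads(body).get("results", [])
    return [(r.get("title", ""), r.get("url", ""), r.get("content", "")) for r in results[:limit]]


def search(query: str, base: str = "http://localhost:8080/search") -> list[tuple[str, str, str]]:
    """Run a live query against a SearXNG instance."""
    with urllib.request.urlopen(searxng_url(query, base), timeout=10) as response:
        return parse_results(response.read())
```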

Starting SearXNG as a Service

To make SearXNG start automatically when the OS boots, create a file named "searxng-start.service" in the /etc/systemd/system folder.

Enter the following lines inside the file:

[Unit]

Description=Start SearxNG Docker Container

After=docker.service

Requires=docker.service

[Service]

Type=oneshot

RemainAfterExit=yes

ExecStart=/usr/bin/docker container start searxng

TimeoutStartSec=0

[Install]

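WantedBy=multi-user.target

Then reload systemd and enable the service so that it runs at boot: sudo systemctl daemon-reload && sudo systemctl enable searxng-start.service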

Open WebUI Setup

Open WebUI is one of those powerful, feature-rich tools that can both impress and infuriate at the same time. It offers an incredible range of capabilities — but finding where each option lives can feel like navigating a labyrinth designed by a mischievous engineer. Before you figure out where everything is, you’ll probably be pulling your hair and questioning your life choices. (Seriously, guys… why like this?!)

But don’t worry — you don’t have to suffer through that chaos alone. To save you the frustration and time, we’ve prepared clear, step-by-step instructions on how to properly set up and configure Open WebUI the right way.

You can install Open WebUI by following instructions given here: https://github.com/open-webui/open-webui

As with SearXNG, there are several ways to install Open WebUI, but we recommend installing it via Docker with the following terminal command:

docker run -d -p 3000:8080 --add-host=host.docker.internal:host-gateway -v open-webui:/app/backend/data --name open-webui --restart always ghcr.io/open-webui/open-webui:main

Open WebUI is under intensive development, so new releases are very frequent. You can update your installation with the following command:

docker run --rm --volume /var/run/docker.sock:/var/run/docker.sock containrrr/watchtower --run-once open-webui

Setting Ollama Endpoint in Open WebUI

You can set up multiple "Ollama API connections" here: http://127.0.0.1:3000/admin/settings/connections

Use "http://host.docker.internal:11434" to point to the locally installed Ollama instance, and "https://ollama.com" to point to the Ollama Cloud instance.

IMPORTANT: When setting up the Ollama Cloud connection, you need to enter your Ollama Cloud API key under "Bearer"!

Then go to http://127.0.0.1:3000/admin/settings/models and open the "Manage Models" option to fetch the models available on each of those Ollama instances:

Notice that here you can fetch models coming from:

A) Local Ollama installation

Note that it is "http://host.docker.internal:11434" that points to the Ollama installed on your computer, not "http://127.0.0.1:11434": inside the Open WebUI container, 127.0.0.1 refers to the container itself, so that address would only work if Ollama were installed inside the same Docker container as Open WebUI. This is important to understand: use "http://host.docker.internal:11434" if you want to use models from a standard Ollama installation on your computer.

From here you can pull all the Ollama models that you have already installed on your computer.

Here you can see and edit details of locally installed models, for example the locally installed granite4:tiny-h model:

B) Ollama cloud

By defining 'https://ollama.com' you can access Ollama cloud models.

For example, the kimi-k2:1t model available in Ollama Cloud:

Setting SearXNG Web Search Tools in Open WebUI

We need to set up, separately, the Web Search feature built into the Open WebUI GUI and the web_search tool used via the API.

Setting SearXNG Web Search Tool for Open WebUI GUI

Go to http://127.0.0.1:3000/admin/settings/web

Here we need to enable web search and choose searxng as the search engine.

In the "Searxng Query URL" enter the following address: http://host.docker.internal:8080/search?q=<query>&format=json

(Notice that required format is JSON, that's why we had to enable JSON format in settings.yml configuration file of SearXNG installation)

Setting SearXNG Web Search Tool for Open WebUI API

Go to http://127.0.0.1:3000/workspace/tools

Here we can setup various tools, but we will focus on "web_search" tool only.

You can find and download various Open WebUI tools here: https://openwebui.com/#open-webui-community or here https://openwebui.com/tools

You can find web_search tool utilizing SearXNG here: https://openwebui.com/t/constliakos/web_search

Alternatively, you can download it also from here: https://matasoft.hr/tool-web_search-export-1760708746640.json.7z

You can use Import button to import a tool into your Open WebUI instance:

In the end, you will have it available here: http://127.0.0.1:3000/workspace/tools/edit?id=web_search

The important thing is that you need to configure SEARXNG_ENGINE_API_BASE_URL with the proper address, by setting default="http://host.docker.internal:8080/search" and not default="http://localhost:8080/search"!

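```python
from pydantic import BaseModel, Field


class Tools:
    # Excerpt of the tool's Valves definition; the rest of the tool is omitted
    class Valves(BaseModel):
        # host.docker.internal lets the Open WebUI container reach SearXNG on the host
        SEARXNG_ENGINE_API_BASE_URL: str = Field(
            default="http://host.docker.internal:8080/search",
            description="The base URL for Search Engine",
        )
```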
Exposing Open WebUI API Endpoint

To use Ollama models (either locally installed or from Ollama cloud) with the connected SearXNG web search tool, we need to set up and expose the Open WebUI API endpoint.

Go to http://127.0.0.1:3000/admin/settings/general.

Ensure that "Enable API key" option is enabled.

Now go to user settings (not Admin panel settings, but regular user settings).

Here you need to generate your Open WebUI API key. This is the API key you are going to use in (Un)Perplexed Spready!

Now your Open WebUI instance is configured to run with the SearXNG web search tool.

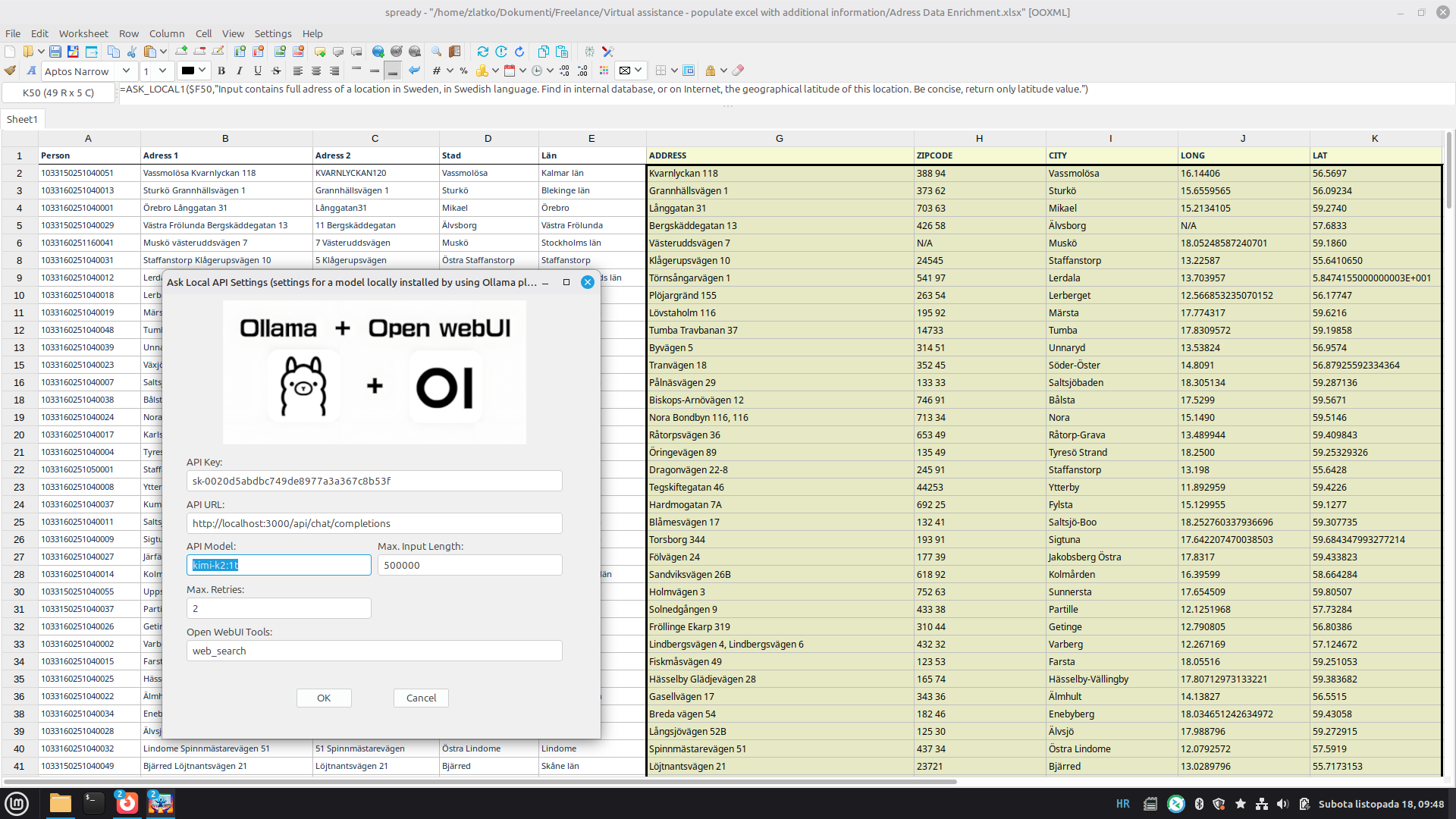

Endpoints Setup in (Un)Perplexed Spready

You can find detailed instructions on how to set up and use (Un)Perplexed Spready here: https://matasoft.hr/qtrendcontrol/index.php/un-perplexed-spready/using-un-perplexed-spready

Go to Settings and choose "Local AI API Settings".

Also available in the toolbar:

We need to enter the Open WebUI API key under the API key, Open WebUI API URL ('http://localhost:3000/api/chat/completions') under the API URL and "web_search" under the Open WebUI Tools.

That's it! Now we can use both locally installed Ollama models and Ollama cloud models, by using the very same API endpoint. Cool, isn't it?

NOTICE: When entering the model name under "API Model", you need to use the model name exactly as shown in the Open WebUI GUI where you registered it. Therefore, use e.g. "kimi-k2:1t" and not "kimi-k2:1t-cloud", although you might think the proper name should have the "-cloud" suffix, as indicated on the Ollama web page. No! Use the name without the "-cloud" suffix, exactly as you see it in the Open WebUI GUI.
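For reference, a request to this endpoint can be sketched as an OpenAI-style chat call. This is a minimal sketch, not (Un)Perplexed Spready's actual code: the API key is a placeholder, and passing the tool through a "tool_ids" list is our assumption about how Open WebUI enables workspace tools per request. The model name is written without the "-cloud" suffix, following the notice above.

```python
import json
import urllib.request

OPENWEBUI_URL = "http://localhost:3000/api/chat/completions"


def build_openwebui_request(
    api_key: str, model: str, prompt: str, tool_ids: list[str]
) -> urllib.request.Request:
    """Build (but do not send) a chat request for the Open WebUI API.

    Assumption: Open WebUI reads the optional "tool_ids" list to enable
    workspace tools such as "web_search" for this request.
    """
    body = {
        "model": model,  # name as registered in Open WebUI, e.g. "kimi-k2:1t"
        "messages": [{"role": "user", "content": prompt}],
        "tool_ids": tool_ids,
    }
    return urllib.request.Request(
        OPENWEBUI_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",  # Open WebUI API key from user settings
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    req = build_openwebui_request(
        "sk-placeholder", "kimi-k2:1t", "Find the ZIP code of Drottninggatan 1, Stockholm.", ["web_search"]
    )
    # urllib.request.urlopen(req) would send it; an OpenAI-style reply carries
    # the answer text in choices[0]["message"]["content"].
    print(req.get_method(), req.full_url)
```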

Working Solution on Windows

While on Linux we set up Open WebUI as an additional layer connecting the SearXNG-based web_search tool with Ollama models, on Windows a more straightforward approach is available through the Ollama Desktop application. It seems that Ollama Desktop on Windows already has an embedded web search tool, and it is possible to expose it to the outside world directly via the API.

The major difference here is that we will use neither the Open WebUI endpoint (http://localhost:3000/api/chat/completions) nor the Ollama Cloud endpoint (https://ollama.com/api/chat), but rather the local Ollama endpoint: http://localhost:11434/api/chat.

Also, contrary to the approach we described for Linux, where we used model names without the "-cloud" suffix, here we must use exactly the locally registered names of the cloud models, e.g. "gpt-oss:120b-cloud" instead of "gpt-oss:120b"!
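Because the naming rule differs between the two setups, it is easy to mix them up. The small helper below merely restates the rule in code; the function name and the check on port 11434 (the local Ollama port used in this article) are our own illustration, not something (Un)Perplexed Spready does for you.

```python
from urllib.parse import urlparse


def api_model_name(endpoint: str, model: str, is_cloud_model: bool = True) -> str:
    """Return the name to enter under "API Model" for a given endpoint (hypothetical helper).

    - Open WebUI endpoint (Linux setup): name as registered in Open WebUI,
      without the "-cloud" suffix, e.g. "gpt-oss:120b".
    - Local Ollama endpoint (Windows, Ollama Desktop): locally registered
      cloud name, with the "-cloud" suffix, e.g. "gpt-oss:120b-cloud".
    Locally installed (non-cloud) models keep their plain name.
    """
    base = model.removesuffix("-cloud")
    # Assumption: the local Ollama endpoint is recognized by port 11434
    if is_cloud_model and urlparse(endpoint).port == 11434:
        return f"{base}-cloud"
    return base


if __name__ == "__main__":
    print(api_model_name("http://localhost:3000/api/chat/completions", "kimi-k2:1t-cloud"))
    print(api_model_name("http://localhost:11434/api/chat", "kimi-k2:1t"))
```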

The first step is to register the models locally from the command prompt, for example: ollama pull kimi-k2:1t-cloud, so that we can use them in the Ollama Desktop application.

Then in the Ollama Desktop application, under Settings, expose Ollama to the network.

In (Un)Perplexed Spready, enter your Ollama Cloud API key under "API Key", the local Ollama API endpoint (http://localhost:11434/api/chat), the locally registered name representing a cloud model (e.g. kimi-k2:1t-cloud), and no Open WebUI tool (keep it blank).

UPDATE: We later tested without entering the Ollama Cloud API key, and it seems to work even without it. When the localhost installation API is used, it seems that both Ollama Cloud models and the web search tool are enabled through the Ollama Desktop application integration.
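In the Windows setup, the same kind of call goes to the local Ollama chat endpoint. A minimal sketch, assuming Ollama's standard /api/chat request format with streaming disabled; the Authorization header is optional, reflecting the observation above that the key did not seem to be required. The helper names are hypothetical.

```python
import json
import urllib.request

OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"


def build_ollama_request(model: str, prompt: str, api_key: str = "") -> urllib.request.Request:
    """Build (but do not send) a non-streaming chat request for local Ollama."""
    body = {
        "model": model,  # locally registered cloud name, e.g. "kimi-k2:1t-cloud"
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,  # ask for one JSON object instead of a stream of chunks
    }
    headers = {"Content-Type": "application/json"}
    if api_key:  # optional: Ollama Cloud API key
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(
        OLLAMA_CHAT_URL, data=json.dumps(body).encode("utf-8"), headers=headers, method="POST"
    )


def reply_text(raw_json: str) -> str:
    """Extract the answer text from a non-streaming /api/chat response."""
    return json.loads(raw_json)["message"]["content"]
```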

SIDE NOTE: Here in the screenshot you can see an interesting use case for (Un)Perplexed Spready, where we had to compile answers to some legal questions in the Indian state of Gujarat. The formula was:

=ASK_LOCAL3($Instructions.$A$1,B2,C2," Your job is to answer the legal query given in the Input3, in category given in Input2, using instructions provided in Input1. Before giving me an output of the answer, I want you to verify the correctness of your answer, cross check the answer for any discrepancies with any government or court orders and check the instructions written here, to make sure your answer is as per the requirements. Remove any reference to citations from the result text, before returning the result. Return now answer to the question given in Input3!")

As you can see, we had to reference a cell in another sheet, where we put the details of the legal regulations and the context for the questions.

Demonstration

We will now demonstrate how it works with a simple project: a spreadsheet containing around 300 addresses in Swedish. The task is to extract the address line and city, and to enrich each record by finding the ZIP code and the geographical longitude and latitude. For the sake of this experiment, we will process only the first 30 rows and compare various Ollama cloud models with a locally installed Ollama model and, finally, with a Perplexity AI model. We ran this test on a Dell Latitude 5410 laptop.

We will first create a new column (column F) containing the concatenated information: =$B$1&": "&B2&", "&$C$1&": "&C2&", "&$D$1&": "&D2&", "&$E$1&": "&E2
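The concatenation formula turns each row into "Header: value" pairs separated by commas, so the model sees which value belongs to which column. The Python function below reproduces that logic for illustration; the header names and values in the usage example are made up, since the actual column headers are not shown here.

```python
def describe_row(headers: list[str], values: list[str]) -> str:
    """Mirror =$B$1&": "&B2&", "&$C$1&": "&C2&... for any number of columns."""
    if len(headers) != len(values):
        raise ValueError("each value needs exactly one header")
    return ", ".join(f"{header}: {value}" for header, value in zip(headers, values))


if __name__ == "__main__":
    # Hypothetical headers and values, for illustration only
    print(describe_row(["Street", "City"], ["Drottninggatan 1", "Stockholm"]))
    # prints: Street: Drottninggatan 1, City: Stockholm
```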

Then we will define AI-driven formulas (ASK_LOCAL1) for the columns to be populated with additional information:

ADDRESS: =ask_local1($F2, "Input contains full address of a location in Sweden, in Swedish language. Extract Street Address and House Number ONLY.")

ZIPCODE: =ask_local1($F2, "Input contains full address of a location in Sweden, in Swedish language. Extract or find on Internet ZIP code (postal code). Be concise, return postal code value only.")

CITY: =ask_local1($F2, "Input contains full address of a location in Sweden, in Swedish language. Extract city name.")

LONG: =ask_local1($F2, "Input contains full address of a location in Sweden, in Swedish language. Find in internal database, or on Internet, the geographical longitude of this location. Be concise, return only longitude value.")

LAT: =ask_local1($F2, "Input contains full address of a location in Sweden, in Swedish language. Find in internal database, or on Internet, the geographical latitude of this location. Be concise, return only latitude value.")

Now we can load and execute it in (Un)Perplexed Spready. Let's load it and hide column F. We will execute the calculation for the first 30 rows and compare the results of Ollama cloud models with one locally installed Ollama model (smollm3). We chose smollm3 because it can run relatively fast on weak hardware while still achieving relatively good results; bigger models would, of course, run even longer. The intent is not to give an extensive comparison, but rather to give you a glimpse of the difference between running Ollama cloud models and locally installed models.

A) Ollama Cloud Model - kimi-k2:1t

With the kimi-k2:1t model, it took 10 minutes 16 seconds to finish the job for 30 rows (30x5=150 formulas). Many consider this model to be the most capable general-purpose open-source model at the moment. You can find more info here: https://ollama.com/library/kimi-k2

B) Ollama Cloud Model - gpt-oss:120b

With gpt-oss:120b, it took 13 minutes 13 seconds to finish the job for 30 rows (30x5=150 formulas). You can find more information about this model here: https://ollama.com/library/gpt-oss

C) Ollama Cloud Model - gpt-oss:20b

With the smaller variant of gpt-oss, it took 13 minutes 32 seconds to finish the job for 30 rows (30x5=150 formulas). More information about the model here: https://ollama.com/library/gpt-oss

D) Local Ollama Model - alibayram/smollm3:latest

Next, we ran the same test with a locally installed Ollama model. We chose smollm3 for its compromise between small size (and therefore fast execution) and pretty good agentic capabilities. It took 3 hours 14 minutes and 55 seconds to finish the job for 30 rows (30x5=150 formulas). This means that on this particular laptop about 390 seconds (6.5 minutes) are required to calculate one row, so 32.5 hours would be required for 300 rows, and 1354 days for a spreadsheet with 300 K rows (!).
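These figures come from simple linear scaling of the measured time per row. A quick check of the numbers, assuming rows are processed one after another at a constant rate (a simplification, since response times vary from formula to formula):

```python
def extrapolate_seconds(elapsed_s: float, rows_done: int, target_rows: int) -> float:
    """Linear extrapolation: measured seconds per row times the target row count."""
    return elapsed_s / rows_done * target_rows


elapsed = 3 * 3600 + 14 * 60 + 55  # 3 h 14 min 55 s for 30 rows (150 formulas)
per_row = elapsed / 30  # about 390 s, i.e. 6.5 minutes per row
hours_300 = extrapolate_seconds(elapsed, 30, 300) / 3600
days_300k = extrapolate_seconds(elapsed, 30, 300_000) / 86_400

print(f"{per_row:.0f} s per row, {hours_300:.1f} h for 300 rows, {days_300k:.0f} days for 300 K rows")
# prints: 390 s per row, 32.5 h for 300 rows, 1354 days for 300 K rows
```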

You can find more information about this model here: https://ollama.com/alibayram/smollm3

E) Perplexity AI Model - sonar-pro

Finally, let's compare it with a Perplexity AI model. We are going to use Perplexity AI's sonar-pro model.

With Perplexity AI, it took only 6 minutes 44 seconds to finish the job for 30 rows (30x5=150 formulas). It is obviously the fastest option, but it comes at a price: during this testing alone, we spent $1.88 on tokens, i.e. about $0.062667 per row. Therefore, it would cost $18.80 for 300 rows, $188 for 3,000 rows and $18,800 for 300 K rows! Consider that: almost $19 K for a spreadsheet with 300 K rows to process!
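The cost figures follow the same linear logic, starting from the $1.88 actually spent on 30 rows. A sketch assuming a constant token cost per row (real costs vary with prompt and answer length):

```python
def extrapolate_cost(spent_usd: float, rows_done: int, target_rows: int) -> float:
    """Linear extrapolation of token cost: cost per row times target rows."""
    return spent_usd / rows_done * target_rows


per_row = 1.88 / 30  # about $0.062667 per row (5 formulas per row)
per_formula = 1.88 / 150  # about $0.0125 per formula

for rows in (300, 3_000, 300_000):
    print(f"{rows:>7,} rows: ${extrapolate_cost(1.88, 30, rows):,.2f}")
```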

Conclusion

While Perplexity AI remains the most capable commercial-grade solution for AI-powered spreadsheet processing in (Un)Perplexed Spready, its usage costs can quickly add up, making it a less practical option for smaller teams or individual users.

Locally installed Ollama models provide an excellent alternative for enthusiasts and professionals equipped with powerful hardware capable of running large language models. However, for extensive or complex spreadsheet operations, performance limitations and hardware demands often become a concern.

The recently launched Ollama Cloud elegantly bridges this gap. It offers access to some of the most advanced open-source models, hosted on high-performance cloud infrastructure — at a fraction of the cost of commercial APIs like Perplexity AI. With its strong performance, flexibility, and modest pricing, Ollama Cloud emerges as the most balanced and affordable choice for AI-driven spreadsheet automation within (Un)Perplexed Spready.

We confidently recommend it to all users seeking the perfect mix of power, convenience, and cost efficiency.

Recommendations to Ollama

If somebody from Ollama reads this article, this is what we would like to see in the Ollama cloud offer in the future:

- Server-side tool integration, primarily a web search tool

- Support for smaller but capable models. We are considering models like IBM's granite3.3:8b, granite4:tiny-h and granite4:small-h; smollm3 (thinking/non-thinking); mistral:7b, olmo2, jan-nano-4b, etc. Fast execution is important for use inside spreadsheets.

- Uncensored / abliterated variants of LLM models, capable of performing uncensored web scraping

Further Reading

Introduction to (Un)Perplexed Spready

Download (Un)Perplexed Spready

Purchase License for (Un)Perplexed Spready

Various Articles about (Un)Perplexed Spready

AI-driven Spreadsheet Processing Services

Contact

Feel free to contact us with your business case and ask for our free analysis and consultancy before ordering our fuzzy data matching services.

Spreadsheet Processing Services with (Un)Perplexed Spready and Perplexity AI


- Written by Super User

- Category: (Un)Perplexed Spready


Transform Your Spreadsheet Workflows with AI-Powered Automation

At Matasoft, we bring the power of artificial intelligence to spreadsheet processing, offering unparalleled efficiency and precision. With our proprietary tool, (Un)Perplexed Spready, integrated with Perplexity AI, we specialize in automating complex spreadsheet tasks such as data extraction, categorization, annotation, and labeling. Powered by Perplexity AI’s advanced language models, our tool understands context, slang, and industry-specific terminology for precise results. Whether you're managing large datasets or need advanced text analysis, our services are designed to save you time and deliver high-quality results.

Stop drowning in data. Start driving decisions.

Our Services

1. Data Extraction

Effortlessly extract meaningful insights from your spreadsheet data:

- Extract specific values from text-heavy cells.

- Pull structured information from unstructured data fields.

- Automate repetitive data extraction tasks across thousands of rows.

Example: Extract product details (e.g., color, size, price) from descriptions across 10,000 rows in under 8 hours.

2. Data Categorization

Organize your data into actionable categories with AI-driven precision:

- Classify products or services based on attributes like price range, brand, or type.

- Group survey responses into thematic categories for analysis.

- Tag customer feedback by sentiment (positive/neutral/negative).

Example: Categorize 5,000 customer reviews into sentiment groups with >99% accuracy.

3. Data Annotation & Labeling

Prepare your datasets for machine learning or advanced analytics:

- Annotate text data with tags for sentiment, topic, or intent.

- Label spreadsheet cells for specific attributes like demographics or geography.

- Create structured datasets from raw spreadsheet inputs.

Example: Annotate 2,000 rows of survey responses with demographic tags in just a few hours.

4. Custom Spreadsheet Solutions

Tailored solutions for unique spreadsheet challenges:

- Automate repetitive tasks using AI-powered formulas.

- Perform bulk transformations on large datasets (e.g., reformatting dates, cleaning text).

- Integrate processed spreadsheets into your existing workflows (e.g., CRM systems).

Example: Automate the cleaning and standardization of 50,000 rows of customer data in a single day.

Why Choose Us?

Transparent Process

We keep you updated and tailor our approach to your specific business case.

Speed & Efficiency

Our AI-powered tool processes up to 1,500 cells per hour, making it up to 10x faster than manual data entry.

Accuracy & Consistency

With Perplexity AI integration, we achieve over 99% accuracy in data classification and extraction tasks.

Advanced AI

We are leveraging state-of-the-art language models for deep contextual understanding. Our "(Un)Perplexed Spready" tool is purpose-built for AI-driven spreadsheet tasks.

Scalability

From small projects to large-scale datasets (500K+ cells per day), our services scale effortlessly to meet your needs.

How It Works

- Provide Your Spreadsheet: Share your file (Excel, Google Sheets, or CSV) along with specific instructions for processing. Describe your business case and your goals.

- Analysis and Piloting: We will design appropriate AI-driven spreadsheet formulas to accomplish the desired result and present preliminary results on a small sample.

- AI-Powered Processing: After getting satisfactory pilot results, we will process your full dataset using AI-driven formulas with Perplexity AI models tailored to your task requirements.

- Delivery: Receive your processed spreadsheet within the agreed timeframe in your preferred format.

Pricing & Packages

We offer flexible pricing based on the complexity and volume of your project:

| Package | Cells Processed | Turnaround Time | Price | Price per Calculated Cell |
|---|---|---|---|---|
| Basic (Starter) | Up to 1,000 calculated cells | 1 day | $60 | $0.06 |
| Standard | Up to 10,000 calculated cells | 2 days | $500 | $0.05 |
| Premium (Advanced) | Up to 100,000 calculated cells (and above) | 5 days | $4,000 | $0.04 |

The above prices apply to regular, modest AI-driven formulas. If a formula includes a very large lookup list (such as a large product categorization taxonomy list), the price might be increased due to the cost of the tokens used.

For projects exceeding 100,000 calculated cells or requiring custom solutions, contact us for a tailored quote.

Use Cases

Some of the typical business use cases:

E-Commerce Businesses

- Categorize thousands of products by attributes like product category, size, color or material.

- Analyze customer reviews to identify trends and improve offerings.

Healthcare Providers

- Organize patient records into structured formats for research.

- Extract key metrics from lab result spreadsheets.

Financial Services

- Automate invoice processing by extracting vendor names and amounts.

- Perform risk assessments by categorizing transaction data.

Marketing & Sales

- Analyze survey data, clean and standardize customer lists

Research & Academia

- Process large datasets for analysis and publication

Why Automate Spreadsheet Processing?

Manual spreadsheet work is time-consuming and prone to errors. By leveraging AI-powered automation with (Un)Perplexed Spready and Perplexity AI:

- Save up to 80% of processing time compared to manual methods. (Un)Perplexed Spready running your spreadsheet calculation does not faint or get tired; it can work day and night without needing to sleep.

- Reduce errors by over 85%, ensuring consistent results across large datasets. By using the same AI-driven formulas across the spreadsheet, you get consistent results across all rows. (Un)Perplexed Spready running your spreadsheet calculation does not get distracted or bored while working, nor does it lose concentration.

- Focus on decision-making rather than tedious data entry tasks. Manual data entry is time-consuming and error-prone, and data entry and categorization tasks become extremely boring and tedious for large datasets. Our AI ensures consistency and frees your team for strategic tasks. Let Artificial Intelligence do the tedious job while you drink your coffee and think about your business strategy!

What Sets Us Apart?

Unlike traditional manual processing or basic automation tools, our service combines:

- Advanced AI Models: Powered by Perplexity AI’s state-of-the-art language models for contextual understanding of text-based data.

- Custom-Built Tool: "(Un)Perplexed Spready" is specifically designed for efficient spreadsheet processing with AI-powered formulas.

- Expertise in Data Handling: Years of experience in managing complex datasets ensure high-quality results.

- Transparent Workflow: We provide regular updates on project progress and tailor our approach to your specific business case.

Get Started Today!

Ready to automate your spreadsheet tasks? Take the first step towards effortless data processing:

- Visit our Fiverr Gig: Order on Fiverr

- Explore our Upwork Project: Hire on Upwork

Contact

Feel free to contact us with your business case and ask for our free analysis and consultancy before ordering our fuzzy data matching services.

Matasoft – Revolutionizing Spreadsheet Processing with Artificial Intelligence.

Our Fuzzy Data Matching Services

Besides AI-driven spreadsheet processing, we are also offering fuzzy data matching services.

If you need entity resolution, fuzzy data matching, de-duplication or cleansing of your datasets, you can check out our data matching service here: Data Matching Service

Further Reading

Introduction to (Un)Perplexed Spready

Download (Un)Perplexed Spready

Purchase License for (Un)Perplexed Spready

(Un)Perplexed Spready with web search enabled Ollama models

Various Articles about (Un)Perplexed Spready

(Un)Perplexed Spready: When Spreadsheets Finally Learn to Think


- Written by Super User

- Category: (Un)Perplexed Spready

(Un)Perplexed Spready: The AI-Powered Spreadsheet Revolution

Have you ever found yourself despairing over the tedious job of extracting information from some huge, boring spreadsheet... thinking how nice it would be if somebody else could do it for you? Cursing your life while trying to make some sense of unstructured, messy data? Going through the rows of the table at a snail's pace, wondering whether you will finish before the end of the universe, or whether you will still be at it inside a black hole, beyond the event horizon?

Yes, we know that feeling. Sometimes there is just no spreadsheet function, no formula to do the job automatically, no automated way of extracting useful information from a messy dataset...only a human can do it, manually. Row by row, until it is done. No machine can understand the meaning of unorganized textual data, no spreadsheet formula can help you manage such data...no machine...wait!

Is that really true anymore? I mean, today, in an age when we are witnessing the rise of Artificial Intelligence everywhere? Would you go around the world on foot or by plane? Would you till the land by hand or by tractor? Would you ride a horse or drive a car to visit your mother far away?

No, my friends, there is no reason to despair anymore, there is no reason to do the hard, tedious job of manual data extraction and categorization in spreadsheets, today when we have advanced AI (Artificial Intelligence) models available. But how? How can you make that funny clever AI chat-bot to do something useful instead of babbling nonsense?

Well, let us present a solution: (UN)PERPLEXED SPREADY, a spreadsheet software that whispers to Artificial Intelligence, freeing you from the hardship of manual spreadsheet work!

Enjoy your life and drink your coffee, while the AI is working for you! It was time, after all, don't you think you deserve it?

Harness the power of advanced language models (LLM) directly within your spreadsheets!

Imagine opening your favorite spreadsheet and having the power of cutting-edge AI models at your fingertips—right inside your cells. (Un)Perplexed Spready is not your average spreadsheet software. It’s a next-generation tool that integrates state-of-the-art language models into its custom formulas, letting you perform advanced data analysis, generate insights, and even craft intelligent responses, all while you go about your daily work (...or just drink your coffee).

This isn’t sci-fi. This is (Un)Perplexed Spready—the spreadsheet software that laughs at the limits of Excel, Google Sheets, or anything you’ve used before.

“But How Is This Even Possible?”

Meet Your New AI Co-Pilots:

- PERPLEXITY functions: Tap into commercial-grade AI (Perplexity.ai) for market analysis, real-time data synthesis, or competitive intelligence.

- ASK_LOCAL formulas: Run free, private AI models (via Ollama) directly on your machine. Mistral, DeepSeek, Llama2: your choice. Crunch data offline, with no API fees and no delays.

- ASK_REMOTE (demo mode): Test ideas on our server.

What Makes (Un)Perplexed Spready Unique?

Direct AI models Integration:

At the heart of (Un)Perplexed Spready is its innovative support for custom functions such as:

- PERPLEXITY: Call top-tier commercial AI directly (via Perplexity AI’s API)

- ASK_LOCAL: Query locally installed AI models through the Ollama platform

- ASK_REMOTE: Access a remotely hosted AI model for on-the-fly analysis

With these functions, you can simply enter a formula like:

=PERPLEXITY1(A2, "What is the category of this product? Choose between following categories: meat, fruits, vegetables, bakery, dairy, others.")

or

=ASK_LOCAL1(A2, "From product description extract the product measure (mass, volume, size etc.) and express it in S.I. units")

or

=ASK_REMOTE1(A2, "What is aggregate phase of the product (solid, liquid, gas)?")

or

=ASK_LOCAL2(A2, B2, "Do the two products have the same color?")

or

=ASK_LOCAL2(A2, B2, "In what kind of packaging container is the product packed? Example of containers are: box, bottle, pouch, can, canister, bag, etc.")

or

=PERPLEXITY2(A2, B2, "What are common characteristics of the two products?")

or

=PERPLEXITY3(A2, B2, C2, "How can we achieve Input1 from Input2, by utilizing Input3?")

or

=ASK_LOCAL3(C2, D2, E2,"Classify Input1 and Input2 by Input3.")

Then watch the AI-generated output appear right in your spreadsheet!
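The numbered function variants pass one, two or three cell values to the model, and the instructions above refer to them as Input1, Input2 and Input3. How (Un)Perplexed Spready actually formats the prompt internally is not documented here; the Python sketch below is a hypothetical illustration of the idea, with made-up labels and layout.

```python
def build_prompt(inputs: list[str], instruction: str) -> str:
    """Hypothetical prompt layout for the ...1/...2/...3 function variants.

    Each cell value is labeled Input1..InputN so the instruction can refer to it.
    This is NOT the documented internal format of (Un)Perplexed Spready.
    """
    if not 1 <= len(inputs) <= 3:
        raise ValueError("the function variants take one to three inputs")
    lines = [f"Input{i}: {value}" for i, value in enumerate(inputs, start=1)]
    lines.append(f"Instruction: {instruction}")
    return "\n".join(lines)


if __name__ == "__main__":
    # Roughly what =ASK_LOCAL2(A2, B2, "...") could send, with made-up cell values
    print(build_prompt(["Red oak chair", "Red pine table"], "Do the two products have the same color?"))
```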

Seamless Experience:

(Un)Perplexed Spready is designed with both beginners and advanced users in mind. Its user-friendly interface incorporates familiar spreadsheet features—such as cell formatting, copy/paste, auto-updating row heights, and advanced sorting—as well as innovative AI integration that brings a new level of interactivity. Every calculation, from basic arithmetic to AI-driven natural language queries, is processed swiftly and accurately.

Advanced Business Scenarios

Only your imagination is the limit when considering possible use cases. By using Perplexity AI, you can bring online search capabilities into your spreadsheet formulas.

Look at this advanced formula as an example:

=PERPLEXITY3(A2,C2,D2,"By using the breakdown of the 12 award categories defined on the web page: https://awards.retailgazette.co.uk/award-categories/ (namely: 'Best Retailer Over £500m', 'Best Retailer Under £500m', 'Own Brand Game Changer', 'Ecommerce Game Changer', 'Marketing Game Changer', 'Sustainability Game Changer', 'Workplace Game Changer', 'Game Changing Team', 'Grocery Game Changer', 'Fashion Game Changer', 'New Store Game Changer', 'Supply Chain Game Changer'), label the company provided in Input1 with the category (only 1 of the 12 categories) they’re most likely to sponsor, based on the product/service the company offers. In order to determine the company product/service offer, investigate company web page provided as Input2 and Linkedin company page being provided as Input3. Then compare the product/service the company offers and determine the most suitable award category as final result.")

The result is amazing:

Similarly, we can find the industry domains for a list of companies by entering the following formula:

=PERPLEXITY3(A2,C2,D2,"For the company provided as Input1 determine the product/service offered, by investigating company web page provided as Input2 and Linkedin company page being provided as Input3. If needed, search Internet for additional information to determine exact industry the company operates within. Return the label of industry in which the company operates within, as final result.")

Free and paid features

Basic spreadsheet functionality is completely free, but using the advanced AI-driven functions requires purchasing and activating a commercial license, for a small fee.

Intelligent Licensing for Premium Features:

Only the premium AI-driven functions require a commercial license. This means that while all basic spreadsheet features remain free, access to advanced AI-driven formulas like PERPLEXITY, ASK_LOCAL and ASK_REMOTE requires a commercial license.

When you launch (Un)Perplexed Spready, your license status is automatically verified and the main interface is updated with clear feedback. For example, if your license is active, you’ll see “License: VALID” and a detailed status report; if not, you’ll be notified immediately that the premium features are locked. You will still be able to use the program as a regular free spreadsheet application. Once you need advanced AI formulas, you can purchase a license for the price of two beers a month.

Once the license is validated, you can start using AI-powered functions in your formulas, whether you’re analyzing large datasets or crafting creative reports.

Value Proposition and Business Use Cases

You can find a more in-depth analysis of the potential use cases and the benefits of (Un)Perplexed Spready in the following articles:

How It Works

Install or Integrate Advanced Artificial Intelligence (AI) LLM models into your spreadsheet

"(Un)Perplexed Spready" is a peculiar spreadsheet software that makes it possible to use advanced LLM AI models directly inside custom formulas. Isn't that cool? Imagine that: powerful AI models doing something useful for a change, not only entertaining you in chat! We say: yeah, let AI do the hard work, while you drink your coffee and enjoy your life!

Currently, the following AI platforms are available via API:

1. Perplexity AI (https://www.perplexity.ai/) - a well known major commercial AI provider

2. Remotely hosted free Ollama based AI model (hosted on our server)

Currently we run Mistral:7b on our own server.

3. Locally installed free AI models (available by Ollama platform on https://ollama.com/)

Of the three options, the most exciting is the third one, which enables you to install any model available on the Ollama platform (https://ollama.com/), run it on your local computer and immediately use it inside spreadsheets with our (Un)Perplexed Spready software!

Isn't that cool? Oh, yes it is! The only limit is your hardware. Fortunately, these days Ollama provides models such as Mistral 7b, DeepScaler:1.5b, DeepSeek-R1:7b and Llama2, which can run even on low-spec hardware, such as an older office laptop. Choose from the multitude of available models here: https://ollama.com/search

Find more detailed recommendations for choosing appropriate LLM model for low-spec office laptops here: Leveraging AI on Low-Spec Computers: A Guide to Ollama Models for (Un)Perplexed Spready

Embed Advanced Artificial Intelligence (AI) LLM models into your spreadsheet formulas

(Un)Perplexed Spready provides a corresponding set of formulas for each of these three AI options, each in three variants that differ in the number of cell-range input parameters, i.e. the number of inputs for the AI prompt.

1. Functions working with Perplexity AI

=PERPLEXITY1(A2, "Some instruction to AI...")

=PERPLEXITY2(A2, B2, "Some instruction to AI...")

=PERPLEXITY3(A2, B2, C2, "Some instruction to AI...")

2. Functions working with a locally installed AI model (using the Ollama platform)

=ASK_LOCAL1(A2, "Some instruction to AI...")

=ASK_LOCAL2(A2, B2, "Some instruction to AI...")

=ASK_LOCAL3(A2, B2, C2, "Some instruction to AI...")

3. Functions working with the remotely hosted AI model (hosted on our server)

=ASK_REMOTE1(A2, "Some instruction to AI...")

=ASK_REMOTE2(A2, B2, "Some instruction to AI...")

=ASK_REMOTE3(A2, B2, C2, "Some instruction to AI...")

Notice: Option 3 with AI model hosted on our server is at this stage intended only for demo and testing purposes, not for real production exploitation.

The hosting server has low hardware specifications, thus calculation is very slow.

We recommend installing your own free AI model from the Ollama platform, chosen according to your hardware specs. This option gives you the highest flexibility and the lowest costs. Of course, if you want enterprise-level accuracy, choose Perplexity AI for superb speed and high-quality AI-driven answers.

Why Choose (Un)Perplexed Spready?

- Revolutionary Integration: Get the best of spreadsheets and AI combined, all in one intuitive interface.

- Market-Leading Flexibility: Choose between Perplexity AI and locally installed LLM models, or even our free remote model hosted on our server, whichever suits your needs.

- User-Centric Design: With familiar spreadsheet features seamlessly merged with AI capabilities, your productivity is bound to soar.

- It's powerful: only your imagination and hardware specs limit what you can do with the help of AI! If data is the new gold, then (Un)Perplexed Spready is your miner!

- It's fun: sure it is fun, when you are drinking coffee and scrolling the news while AI does the hard job. Drink your coffee and let the AI work for you!

Get Started!

Join the revolution today. Let (Un)Perplexed Spready free you from manual data crunching and unlock the full potential of AI—right inside your spreadsheet. Whether you're a business analyst, a researcher, or just an enthusiast, our powerful integration will change the way you work with data.

You can find more practical information on how to setup and use the (Un)Perplexed Spready software here: Using (Un)Perplexed Spready

Download

Download the (Un)Perplexed Spready software: Download (Un)Perplexed Spready

Request Free Evaluation Period

When you run the application, you will be presented with the About form, where you will find an automatically generated Machine Code for your computer. Send us an email specifying your machine code and ask for a trial license. We will send you a trial license key that will unlock the premium AI functions for a limited time period.

Contact us at the following email address:

Sales Contact

Purchase commercial license

For the price of two beers a month, you can have a faithful co-worker, the AI-whispering spreadsheet software, to do the hard work while you drink your coffee!

You can purchase the commercial license here: Purchase License for (Un)Perplexed Spready

AI-driven Spreadsheet Processing Services

We are also offering AI-driven spreadsheet processing services with (Un)Perplexed Spready software.

If you need massive data extraction, data categorization, data classification, data annotation or data labeling, check out our corresponding services here: AI-driven Spreadsheet Processing Services

Further Reading

Download (Un)Perplexed Spready

Purchase License for (Un)Perplexed Spready

Leveraging AI on Low-Spec Computers: A Guide to Ollama Models for (Un)Perplexed Spready

(Un)Perplexed Spready with web search enabled Ollama models

Various Articles about (Un)Perplexed Spready
