Introduction

At Matasoft, we believe that technology exists to amplify human creativity, not to burden it with repetitive and tedious tasks. Yet, countless professionals still spend hours manually entering and editing complex spreadsheets filled with unstructured text — a type of data traditional spreadsheet formulas simply cannot process effectively.

Until recently, the only solution was painstaking manual work, row by row. That has changed with the rise of large language models (LLMs), which bring contextual understanding and intelligent automation into the realm of data processing.

To harness this advancement, we developed (Un)Perplexed Spready — software that seamlessly integrates artificial intelligence into spreadsheet formulas. It enables machines to extract, analyze, and categorize messy data effortlessly, allowing users to focus on insights rather than data cleanup.

Our philosophy is simple: let AI do the hard work while you enjoy your coffee.

About (Un)Perplexed Spready

Our (Un)Perplexed Spready application is a next-generation spreadsheet tool that embeds AI prompts directly inside spreadsheet formulas, enabling true intelligent data processing powered by artificial intelligence. You can find detailed information on our official page: https://matasoft.hr/QTrendControl/index.php/un-perplexed-spready.

At present, the software supports two types of AI integrations: Perplexity AI – a highly capable web search–oriented commercial AI, and Ollama – a local platform hosting a variety of open-source large language models you can run directly on your own machine.

While Perplexity AI offers excellent contextual accuracy and real-time web data retrieval, its pricing structure makes it less accessible for small businesses or individual users, as token usage costs can quickly become significant. For this reason, this article focuses on a more affordable alternative: the Ollama-based setup, which provides local AI processing at minimal or zero cost, making advanced spreadsheet automation achievable for everyone.

About Ollama

Ollama is a tool that lets you download and run large language models (LLMs) entirely on your own computer—no cloud account or API key required. You install it once, then pull whichever open-source model you want (Llama 3, Mistral, Gemma, Granite …) and talk to it from the command line or from your own scripts. Think of it as a lightweight, self-contained “Docker for LLMs”: it bundles the model weights, runtime, and a simple API server into one binary so you can spin up a private, local AI assistant with a single command.

You can find more information here: https://ollama.com/

By default, Ollama exposes its API at http://localhost:11434; the chat endpoint is http://localhost:11434/api/chat

About Ollama Cloud

Recently, Ollama introduced a new cloud-hosted offering featuring some of the largest and most capable open-source AI models. While Ollama’s local setup has always been excellent for privacy and control, running large language models (LLMs) locally requires modern, high-performance hardware — something most standard office computers simply lack. As a result, using (Un)Perplexed Spready with locally installed models for large or complex spreadsheet operations can quickly become impractical due to hardware limitations.

This is where Ollama Cloud steps in. It provides scalable access to powerful open-source models hosted in the cloud, delivering the same flexibility without demanding high-end hardware. The pricing is impressively low — a fraction of what comparable commercial APIs like Perplexity AI typically cost. This makes it an ideal solution for users and organizations who want high-quality AI performance without significant infrastructure investment.

We’re genuinely excited about this development — Ollama Cloud bridges the gap between performance, affordability, and accessibility. Well done, Ollama — and thank you for making advanced AI available to everyone!

You can find more information here: https://ollama.com/cloud

The Ollama Cloud API endpoint is: https://ollama.com/api/chat
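If you want to talk to these two endpoints directly, outside (Un)Perplexed Spready, here is a minimal Python sketch using only the standard library. It assumes the request shape of Ollama's /api/chat endpoint (a "model", a list of "messages", and "stream": false to get one JSON object back) and, for the cloud endpoint, an API key sent as a Bearer token. The function names are our own and the model names are only examples.

```python
import json
import urllib.request

LOCAL_CHAT_URL = "http://localhost:11434/api/chat"
CLOUD_CHAT_URL = "https://ollama.com/api/chat"


def build_chat_request(url, model, prompt, api_key=None):
    """Build a non-streaming POST request for Ollama's /api/chat endpoint."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,  # ask for one JSON object instead of streamed chunks
    }
    headers = {"Content-Type": "application/json"}
    if api_key:
        # Needed for the cloud endpoint; a local server does not require it
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers=headers,
        method="POST",
    )


def extract_reply(response_json):
    """Return the assistant's text from a non-streaming /api/chat response."""
    return response_json["message"]["content"]


def chat(url, model, prompt, api_key=None, timeout=300):
    """Send one prompt and return the model's answer (performs a network call)."""
    request = build_chat_request(url, model, prompt, api_key)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return extract_reply(json.load(response))
```

For example, chat(LOCAL_CHAT_URL, "granite4:tiny-h", "Say hello in Swedish.") queries a locally pulled model, while chat(CLOUD_CHAT_URL, "gpt-oss:120b", "Say hello in Swedish.", api_key=...) queries a cloud model with the key created in your Ollama account. We believe the direct cloud API expects the model name without the "-cloud" suffix, but check the model page to be sure.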

About Ollama Desktop Application

Ollama has also recently released a desktop application, which adds interesting capabilities such as integration with a web search tool.

Unfortunately, it is currently available only for Windows and macOS, not for Linux. We hope this will change in the future. We haven't checked, but it might be possible to run it on Linux via the Wine compatibility layer.

About SearXNG

SearXNG is a free, open-source “meta-search” engine that you can run yourself. Instead of crawling the web on its own, it forwards your query to dozens of public search services (Google, Bing, DuckDuckGo, Mojeek, Qwant etc.), merges the results, strips out trackers and ads, and returns a single clean page. All queries originate from your server/PC, so the upstream engines never see your IP or set cookies on your browser. In short: it’s a privacy-respecting, self-hostable proxy that turns multiple third-party search engines into one, without logging or profiling users.

Unfortunately, while it is quite easy to install on Linux, installing it on Windows is much harder, and depending on the Windows version, it might be impossible.

About Open WebUI

Open WebUI (formerly “Ollama WebUI”) is a browser-based, self-hosted dashboard that gives you a ChatGPT-like interface for any LLM you run locally or remotely.

It speaks the same API that Ollama exposes, so you just point it at `http://localhost:11434` (or any other OpenAI-compatible endpoint) and instantly get a full-featured GUI: threaded chats, markdown, code highlighting, LaTeX, image uploads, document ingestion (RAG), multi-model support, user log-ins, and role-based access.

Think of it as “a private ChatGPT in a Docker container” – you keep your models and data on your own machine, but your users interact through a polished web front-end.

Interestingly, it supports integrating tools, such as web search tools, and exposes them externally via an API endpoint at 'http://localhost:3000/api/chat/completions'.

Thus, instead of using the Ollama endpoints 'http://localhost:11434/api/chat' or 'https://ollama.com/api/chat', we will use 'http://localhost:3000/api/chat/completions' with the SearXNG-backed "web_search" tool enabled. The great thing is that we can use both local Ollama models and Ollama cloud models through the same Open WebUI API endpoint ('http://localhost:3000/api/chat/completions').

Working Solution on Linux

Ollama Setup

Install Ollama by running the following command in a terminal:

curl -fsSL https://ollama.com/install.sh | sh

Search and browse local and cloud models available here: https://ollama.com/search

Download and install local LLM models with the "pull" command, like this:

ollama pull granite4:tiny-h

Register and sign in at https://ollama.com/cloud to be able to use Ollama cloud models. Optionally (and highly recommended), upgrade to a Pro subscription.

Also create an API key here: https://ollama.com/settings/keys

Then you can register cloud models with commands like this:

ollama pull gpt-oss:20b-cloud

Notice the difference between actually installing a local copy of a model and registering the cloud variant of the same model.

Registering a cloud model is needed for running it via the CLI in a terminal (for example "ollama run gpt-oss:120b-cloud") or inside the Ollama Desktop application.

However, when you use a cloud model via the API, this registration is not needed; instead, you call the model through the API endpoint at https://ollama.com/api/chat

Read the API related documents here:

Configure Ollama Service

Go to the folder /etc/systemd/system and open the ollama.service file as root (e.g. with sudo). Add these two lines to its [Service] section:

Environment="OLLAMA_HOST=0.0.0.0:11434"
Environment="OLLAMA_ORIGINS=*"

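This makes Ollama listen on all network interfaces (not only on localhost) and accept cross-origin requests from any origin, which lets Open WebUI, running in a Docker container, reach the locally installed Ollama.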

Then activate it:

sudo systemctl daemon-reload

sudo systemctl restart ollama

sudo systemctl status ollama

sudo journalctl -u ollama -f
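After restarting the service, a quick way to confirm that Ollama is listening is to ask it which models it has. The sketch below calls Ollama's GET /api/tags endpoint, which lists the models available to that server, using only the Python standard library; the helper names are our own. Because of the OLLAMA_HOST=0.0.0.0 setting, the same check should also work from another machine if you replace localhost with the host's IP address, firewall permitting.

```python
import json
import urllib.request


def model_names(tags_json):
    """Extract model names from an /api/tags response body."""
    return [m["name"] for m in tags_json.get("models", [])]


def list_local_models(base_url="http://localhost:11434", timeout=5):
    """Return the model names reported by an Ollama server.

    Returns None if the server cannot be reached (service stopped,
    wrong OLLAMA_HOST binding, firewall, ...).
    """
    try:
        with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
            return model_names(json.load(resp))
    except OSError:  # URLError, refused connections and timeouts are all OSErrors
        return None
```

If list_local_models() returns None right after the restart, the journalctl output above is the first place to look.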

SearXNG Setup

There are multiple ways to install and set up SearXNG, but I recommend the Docker installation described here: https://docs.searxng.org/admin/installation-docker.html.

However, the instructions on that page did not work for us exactly as written, so we need to modify them a bit.

Create Directories for Configuration and Persistent Data

In terminal type:

mkdir -p ./searxng/config/ ./searxng/data/

cd ./searxng/

Run the Docker Container

Type in terminal:

docker rm -f searxng

docker run --name searxng -d -p 8080:8080 -v "./config/:/etc/searxng/" -v "./data/:/var/cache/searxng/" --add-host=host.docker.internal:host-gateway docker.io/searxng/searxng:latest

Edit SearxNG Configuration for JSON Support

Find the settings.yml configuration file (with the volume mapping above, it appears in ./searxng/config/ after the first start) and add a line enabling the JSON format. This is absolutely critical: without it, the integration with SearXNG will not work! (Somebody please tell the SearXNG developers to enable JSON support by default!)

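In the "search" section, under the "formats:" subsection, add a "- json" line next to the existing "- html" line, so that it looks like this (other keys omitted):

search:
  formats:
    - html
    - json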

After that change you need to restart the SearXNG container:

sudo docker restart searxng

Starting SearXNG

You can start SearXNG with this command in terminal:

docker container start searxng

SearXNG should then be available at http://localhost:8080/

Verify that the JSON format is enabled by loading the following page in a browser: http://localhost:8080/search?q=%3Cquery%3E&format=json

You should see a JSON response containing a list of search results, rather than an error page.
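The same check can be scripted. This is a minimal sketch, assuming the SearXNG container from above is reachable at http://localhost:8080 and that its JSON output contains a "results" list whose entries carry "title" and "url" fields; the helper names are hypothetical.

```python
import json
import urllib.parse
import urllib.request

SEARXNG_SEARCH_URL = "http://localhost:8080/search"


def build_search_url(query, base_url=SEARXNG_SEARCH_URL):
    """URL-encode the query and ask SearXNG for JSON instead of HTML."""
    return f"{base_url}?{urllib.parse.urlencode({'q': query, 'format': 'json'})}"


def top_results(search_json, limit=3):
    """Return (title, url) pairs for the first few results."""
    return [
        (item.get("title", ""), item.get("url", ""))
        for item in search_json.get("results", [])[:limit]
    ]


def search(query, base_url=SEARXNG_SEARCH_URL, timeout=15):
    """Query SearXNG (performs a network call) and return the top results."""
    with urllib.request.urlopen(build_search_url(query, base_url), timeout=timeout) as response:
        return top_results(json.load(response))
```

If JSON output has not been enabled in settings.yml, we would expect SearXNG to refuse the request with an HTTP error (urllib then raises HTTPError), which makes a missed configuration step easy to spot.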

Starting SearXNG as a Service

To start SearXNG automatically when the OS boots, create a file named "searxng-start.service" inside the folder /etc/systemd/system.

Enter the following lines inside the file:

[Unit]
Description=Start SearxNG Docker Container
After=docker.service
Requires=docker.service

[Service]
Type=oneshot
RemainAfterExit=yes
ExecStart=/usr/bin/docker container start searxng
TimeoutStartSec=0

[Install]
WantedBy=multi-user.target

Then activate it:

sudo systemctl daemon-reload

sudo systemctl enable searxng-start.service

sudo systemctl start searxng-start.service

sudo systemctl status searxng-start.service

Open WebUI Setup

Open WebUI is one of those powerful, feature-rich tools that can impress and infuriate at the same time. It offers an incredible range of capabilities, but finding where each option lives can feel like navigating a labyrinth designed by a mischievous engineer. Before you figure out where everything is, you'll probably be pulling your hair out and questioning your life choices. (Seriously, guys… why like this?!)

But don't worry: you don't have to suffer through that chaos alone. To save you the frustration and time, we've prepared clear, step-by-step instructions on how to set up and configure Open WebUI properly.

You can install Open WebUI by following instructions given here: https://github.com/open-webui/open-webui

As with SearXNG, there are several ways to install Open WebUI, but we recommend installing it via Docker by entering the following command in a terminal:

docker run -d -p 3000:8080 --add-host=host.docker.internal:host-gateway -v open-webui:/app/backend/data --name open-webui --restart always ghcr.io/open-webui/open-webui:main

Open WebUI is under intensive development, so new releases are very frequent. You can update your installation with the following command:

docker run --rm --volume /var/run/docker.sock:/var/run/docker.sock containrrr/watchtower --run-once open-webui

Setting Ollama Endpoint in Open WebUI

You can set up multiple "Ollama API connections" here: http://127.0.0.1:3000/admin/settings/connections

Use "http://host.docker.internal:11434" to point to the locally installed Ollama instance, and "https://ollama.com" to point to the Ollama cloud instance.

IMPORTANT: When setting up the Ollama cloud connection, you need to enter your Ollama cloud API key under "Bearer"!

Then go to http://127.0.0.1:3000/admin/settings/models and open the "Manage Models" option to fetch the models available on each of those Ollama instances.

Notice that here you can fetch models coming from:

A) Local Ollama installation

Note that it is "http://host.docker.internal:11434" that points to the Ollama installed on your computer (the Docker host), not "http://127.0.0.1:11434", which would only be correct if Ollama were installed inside the same Docker container as Open WebUI. This is important to understand: use "http://host.docker.internal:11434" if you want to use models from a standard Ollama installation on your computer.

From here you can pull all the Ollama models that you have already installed on your computer.

Here you can see and edit details about locally installed models, for example locally installed granite4:tiny-h model:

B) Ollama cloud

By defining 'https://ollama.com' you can access Ollama cloud models.

For example, kimi-k2:1t model available in Ollama cloud:

Setting SearXNG Web Search Tools in Open WebUI

The Web Search tool used by the Open WebUI GUI and the web_search tool used via the API have to be set up separately.

Setting SearXNG Web Search Tool for Open WebUI GUI

Go to http://127.0.0.1:3000/admin/settings/web

Here we need to enable web search and choose searxng as the search engine.

In the "Searxng Query URL" enter the following address: http://host.docker.internal:8080/search?q=<query>&format=json

(Notice that the required format is JSON; that's why we had to enable the JSON format in the settings.yml configuration file of the SearXNG installation.)

Setting SearXNG Web Search Tool for Open WebUI API

Go to http://127.0.0.1:3000/workspace/tools

Here we can setup various tools, but we will focus on "web_search" tool only.

You can find and download various Open WebUI tools here: https://openwebui.com/#open-webui-community or here https://openwebui.com/tools

You can find web_search tool utilizing SearXNG here: https://openwebui.com/t/constliakos/web_search

Alternatively, you can download it also from here: https://matasoft.hr/tool-web_search-export-1760708746640.json.7z

You can use the Import button to import a tool into your Open WebUI instance.

In the end, it will be available here: http://127.0.0.1:3000/workspace/tools/edit?id=web_search

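Importantly, you need to configure SEARXNG_ENGINE_API_BASE_URL with the proper address by setting default="http://host.docker.internal:8080/search" and not default="http://localhost:8080/search"! Inside the Open WebUI container, "localhost" refers to the container itself, not to your computer where SearXNG is published.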

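from pydantic import BaseModel, Field


class Tools:
    class Valves(BaseModel):
        SEARXNG_ENGINE_API_BASE_URL: str = Field(
            default="http://host.docker.internal:8080/search",
            description="The base URL for Search Engine",
        )
        # ... remaining valves and the tool's methods are omitted in this excerpt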

Exposing Open WebUI API Endpoint

To use Ollama models (whether locally installed or from Ollama cloud) together with the connected SearXNG web search tool, we need to set up and expose the Open WebUI API endpoint.

Go to http://127.0.0.1:3000/admin/settings/general.

Ensure that the "Enable API key" option is enabled.

Now go to user settings (not Admin panel settings, but regular user settings).

Here you need to generate your Open WebUI API key; this is the API key you are going to use in (Un)Perplexed Spready!

Your Open WebUI instance is now configured to run with the SearXNG web search tool.

Endpoints Setup in (Un)Perplexed Spready

You can find detailed instructions on how to set up and use (Un)Perplexed Spready here: https://matasoft.hr/qtrendcontrol/index.php/un-perplexed-spready/using-un-perplexed-spready

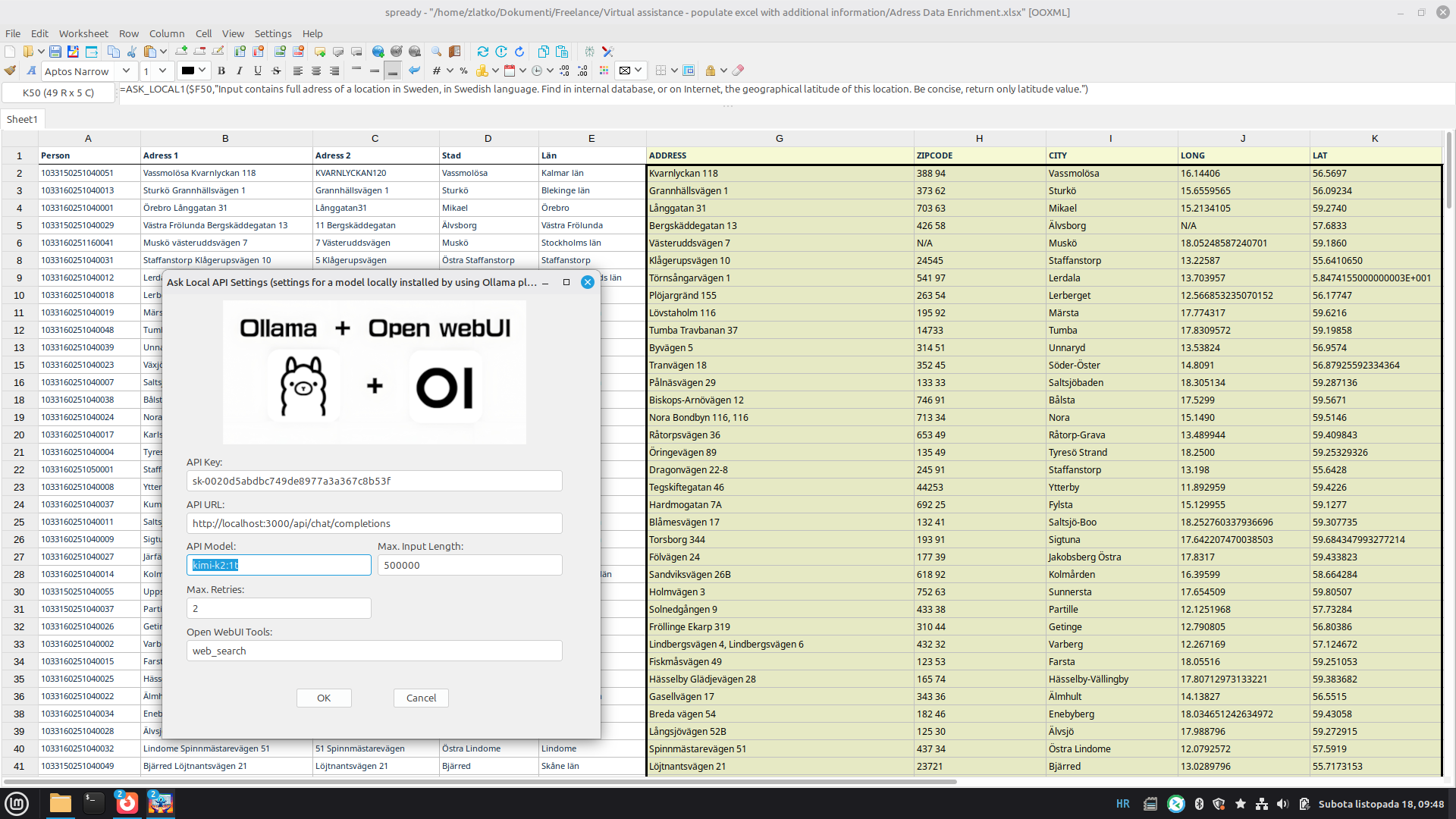

Go to Settings and choose "Local AI API Settings".

These settings are also available from the toolbar.

We need to enter the Open WebUI API key under "API Key", the Open WebUI API URL ('http://localhost:3000/api/chat/completions') under "API URL", and "web_search" under "Open WebUI Tools".

That's it! Now we can use both locally installed Ollama models and Ollama cloud models, by using the very same API endpoint. Cool, isn't it?

NOTICE: When entering the model name under "API Model", use the model name exactly as shown in the Open WebUI GUI where you registered it. Therefore, use e.g. "kimi-k2:1t" and not "kimi-k2:1t-cloud". You might think the proper name should have the "-cloud" suffix, as indicated on the Ollama web page, but no: use it without the "-cloud" suffix, exactly as you see it in the Open WebUI GUI.
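To test this setup outside (Un)Perplexed Spready, the sketch below sends an OpenAI-style chat completion request to the Open WebUI endpoint, with the Open WebUI API key as a Bearer token. It is not how (Un)Perplexed Spready works internally. One loud assumption: we attach the tool by listing its ID in a "tool_ids" field of the request body, which is our understanding of how Open WebUI's chat completions endpoint selects workspace tools; please verify this against the Open WebUI API documentation for your version. The helper names are hypothetical.

```python
import json
import urllib.request

OPENWEBUI_URL = "http://localhost:3000/api/chat/completions"


def build_openwebui_request(api_key, model, prompt, tool_ids=("web_search",), url=OPENWEBUI_URL):
    """Build an OpenAI-style chat completion request for Open WebUI.

    Assumption: tools are selected by listing their IDs in "tool_ids".
    """
    payload = {
        "model": model,  # name exactly as shown in Open WebUI, e.g. "kimi-k2:1t"
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,
    }
    if tool_ids:
        payload["tool_ids"] = list(tool_ids)
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )


def completion_text(response_json):
    """Read the reply from an OpenAI-style response body."""
    return response_json["choices"][0]["message"]["content"]


def ask(api_key, model, prompt, timeout=600):
    """Send one prompt through Open WebUI (performs a network call)."""
    request = build_openwebui_request(api_key, model, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return completion_text(json.load(response))
```

The generous default timeout reflects that a web-search-enabled answer can take noticeably longer than a plain completion.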

Working Solution on Windows

While on Linux we set up Open WebUI as an additional layer connecting the SearXNG-based web_search tool with Ollama models, on Windows a more straightforward approach is available: the Ollama Desktop application. It seems that Ollama Desktop on Windows already has a web search tool built in, and it can be exposed to the outside world directly via the API.

The major difference here is that we will use neither the Open WebUI endpoint (http://localhost:3000/api/chat/completions) nor the Ollama Cloud endpoint (https://ollama.com/api/chat), but rather the local Ollama endpoint: http://localhost:11434/api/chat.

Also, contrary to the approach we described for Linux, where we used model names without the "-cloud" suffix, here we must use exactly the locally registered names of cloud models, e.g. "gpt-oss:120b-cloud" instead of "gpt-oss:120b"!
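The two naming rules (no "-cloud" suffix through Open WebUI, the "-cloud" suffix through the local Ollama endpoint on Windows) are easy to mix up when switching between setups. Here is a small hypothetical helper that encodes them, assuming the endpoint can be recognized from its URL path and port; adapt the checks to your own URLs.

```python
CLOUD_SUFFIX = "-cloud"


def strip_cloud_suffix(model):
    """Remove a trailing "-cloud" from a model name, if present."""
    return model[: -len(CLOUD_SUFFIX)] if model.endswith(CLOUD_SUFFIX) else model


def model_name_for_endpoint(model, endpoint_url, is_cloud_model):
    """Hypothetical helper applying this article's model-naming rules.

    Endpoints are recognized by URL only (an assumption).
    """
    url = endpoint_url.rstrip("/")
    if url.endswith("/api/chat/completions"):
        # Open WebUI: use the name shown in its GUI, e.g. "kimi-k2:1t"
        return strip_cloud_suffix(model)
    if url.endswith(":11434/api/chat") and is_cloud_model:
        # Local Ollama (Windows / Ollama Desktop): locally registered cloud name
        return strip_cloud_suffix(model) + CLOUD_SUFFIX
    # Any other case (e.g. a purely local model): leave the name unchanged
    return model
```

For instance, the same cloud model resolves to "kimi-k2:1t" for the Open WebUI URL and to "kimi-k2:1t-cloud" for http://localhost:11434/api/chat.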

The first step is to register the models locally from the command prompt, for example: ollama pull kimi-k2:1t-cloud, so that we can use them in the Ollama Desktop application.

Then in the Ollama Desktop application, under Settings, expose Ollama to the network.

In (Un)Perplexed Spready, enter your Ollama Cloud API key under "API Key", the local Ollama API endpoint (http://localhost:11434/api/chat) under "API URL", the locally registered name of a cloud model (e.g. kimi-k2:1t-cloud) under "API Model", and no Open WebUI tool (leave that field blank).

UPDATE: We later tested without entering the Ollama Cloud API key, and it seems to work even without it. When the localhost API is used, both Ollama Cloud models and the web search tool appear to be enabled through the integration with the Ollama Desktop application.

SIDE NOTE: The screenshot here shows an interesting use case for (Un)Perplexed Spready, where we had to compile answers to legal questions for the Indian state of Gujarat. The formula was:

=ASK_LOCAL3($Instructions.$A$1,B2,C2," Your job is to answer the legal query given in the Input3, in category given in Input2, using instructions provided in Input1. Before giving me an output of the answer, I want you to verify the correctness of your answer, cross check the answer for any discrepancies with any government or court orders and check the instructions written here, to make sure your answer is as per the requirements. Remove any reference to citations from the result text, before returning the result. Return now answer to the question given in Input3!")

As you can see, we had to reference a cell on another sheet, where we put the details of the legal regulations and the context for the questions.

Demonstration

We will now demonstrate how it works with a simple project: a spreadsheet containing around 300 addresses in Swedish. The task is to extract the street address and the city, and to enrich each row with the ZIP code and the geographical longitude and latitude. For the sake of this experiment, we will process only the first 30 rows and compare several Ollama cloud models with a locally installed Ollama model and, finally, with a Perplexity AI model. We ran this test on a Dell Latitude 5410 laptop.

We will first create a new column (column F) containing the concatenated information: =$B$1&": "&B2&", "&$C$1&": "&C2&", "&$D$1&": "&D2&", "&$E$1&": "&E2

Then we will define AI-driven formulas (using the ASK_LOCAL1 function) for the columns to be populated with additional information:

ADDRESS: =ask_local1($F2, "Input contains full adress of a location in Sweden, in Swedish language. Extract Street Address and House Number ONLY.")

ZIPCODE: =ask_local1($F2, "Input contains full adress of a location in Sweden, in Swedish language. Extract or find on Internet ZIP code (postal code). Be concise, return postal code value only.")

CITY: =ask_local1($F2, "Input contains full adress of a location in Sweden, in Swedish language. Extract city name.")

LONG: =ask_local1($F2, "Input contains full adress of a location in Sweden, in Swedish language. Find in internal database, or on Internet, the geographical longitude of this location. Be concise, return only longitude value.")

LAT: =ask_local1($F2, "Input contains full adress of a location in Sweden, in Swedish language. Find in internal database, or on Internet, the geographical latitude of this location. Be concise, return only latitude value.")

Now we can load and execute it in (Un)Perplexed Spready. Let's load it and hide column F. We will calculate the first 30 rows and compare the results of Ollama cloud models with one locally installed Ollama model, smollm3. We chose smollm3 because it runs relatively fast on weak hardware while still achieving relatively good results; bigger models would, of course, take even longer. The intent is not to give an extensive comparison, but rather to give you a glimpse of the difference between running Ollama cloud models and locally installed models.

A) Ollama Cloud Model - kimi-k2:1t

With the kimi-k2:1t model, it took 10 minutes 16 seconds to finish the job for 30 rows (30x5=150 formulas). Many consider this model the most capable general-purpose open-source model at the moment. You can find more info here: https://ollama.com/library/kimi-k2

B) Ollama Cloud Model - gpt-oss:120b

With gpt-oss:120b, it took 13 minutes 13 seconds to finish the job for 30 rows (30x5=150 formulas). You can find more information about this model here: https://ollama.com/library/gpt-oss

C) Ollama Cloud Model - gpt-oss:20b

With the smaller variant of gpt-oss, it took 13 minutes 32 seconds to finish the job for 30 rows (30x5=150 formulas). More information about the model is available here: https://ollama.com/library/gpt-oss

D) Local Ollama Model - alibayram/smollm3:latest

Next, we ran the same test with a locally installed Ollama model. We chose smollm3 for its balance of small size (and therefore fast execution) and pretty good agentic capabilities. It took 3 hours 14 minutes and 55 seconds to finish the job for 30 rows (30x5=150 formulas). This means that on this particular laptop, about 390 seconds (6.5 minutes) are required to calculate one row, so 300 rows would take 32.5 hours, and a spreadsheet with 300 K rows would take 1354 days (!).

You can find more information about this model here: https://ollama.com/alibayram/smollm3
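The smollm3 extrapolation is simple arithmetic on the measured run time. This Python snippet reproduces it, assuming run time scales linearly with the number of rows (it ignores caching, batching or thermal throttling effects).

```python
from datetime import timedelta

ROWS_MEASURED = 30  # rows processed in the test (5 formulas per row)
ELAPSED = timedelta(hours=3, minutes=14, seconds=55)  # measured smollm3 run

# Average wall-clock time per row on the test laptop (~389.8 s, i.e. ~6.5 min)
SECONDS_PER_ROW = ELAPSED.total_seconds() / ROWS_MEASURED


def projected_hours(rows):
    """Linear projection of total run time in hours."""
    return rows * SECONDS_PER_ROW / 3600


def projected_days(rows):
    """Linear projection of total run time in days."""
    return rows * SECONDS_PER_ROW / 86400
```

projected_hours(300) gives roughly 32.5 hours and projected_days(300_000) roughly 1354 days, matching the figures above.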

E) Perplexity AI Model - sonar-pro

Finally, let's compare it with a Perplexity AI model. We are going to use Perplexity AI's sonar-pro model.

With Perplexity AI, it took only 6 minutes 44 seconds to finish the job for 30 rows (30x5=150 formulas). It is clearly the fastest option, but it comes at a price: during this test alone, we spent $1.88 on tokens, i.e. about $0.062667 per row. Therefore, it would cost $18.80 for 300 rows, $188 for 3000 rows and $18,800 for 300 K rows! Consider that: almost $19 K to process a spreadsheet with 300 K rows!
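The cost projection follows the same linear reasoning, assuming the token spend per row stays constant as the spreadsheet grows:

```python
SPENT_USD = 1.88  # token cost of the 30-row sonar-pro test
ROWS_MEASURED = 30

COST_PER_ROW = SPENT_USD / ROWS_MEASURED  # ~0.0627 USD per row (5 formulas)


def projected_cost(rows):
    """Linear projection of the token cost in USD."""
    return rows * COST_PER_ROW


for rows in (300, 3_000, 300_000):
    print(f"{rows:>7,} rows: ${projected_cost(rows):,.2f}")
```

In practice, token usage varies with prompt and answer length, so treat these numbers as an order-of-magnitude estimate.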

Conclusion

While Perplexity AI remains the most capable commercial-grade solution for AI-powered spreadsheet processing in (Un)Perplexed Spready, its usage costs can quickly add up, making it a less practical option for smaller teams or individual users.

Locally installed Ollama models provide an excellent alternative for enthusiasts and professionals equipped with powerful hardware capable of running large language models. However, for extensive or complex spreadsheet operations, performance limitations and hardware demands often become a concern.

The recently launched Ollama Cloud elegantly bridges this gap. It offers access to some of the most advanced open-source models, hosted on high-performance cloud infrastructure — at a fraction of the cost of commercial APIs like Perplexity AI. With its strong performance, flexibility, and modest pricing, Ollama Cloud emerges as the most balanced and affordable choice for AI-driven spreadsheet automation within (Un)Perplexed Spready.

We confidently recommend it to all users seeking the perfect mix of power, convenience, and cost efficiency.

Recommendations to Ollama

If somebody from Ollama reads this article, here is what we would like to see in the Ollama cloud offer in the future:

- Server-side tool integration, primarily a web search tool

- Support for smaller but capable models. We are thinking of models like IBM's granite3.3:8b, granite4:tiny-h and granite4:small-h; smollm3 (thinking/non-thinking); mistral:7b; olmo2; jan-nano-4b etc. Fast execution is important for usage inside spreadsheets.

- Uncensored / abliterated variants of LLM models, capable of performing uncensored web scraping

Further Reading

Introduction to (Un)Perplexed Spready

Download (Un)Perplexed Spready

Try (Un)Perplexed Spready in Your Browser

Purchase License for (Un)Perplexed Spready

Various Articles about (Un)Perplexed Spready

AI-driven Spreadsheet Processing Services

Contact

Feel free to contact us with your business case and ask for our free analysis and consultancy before ordering our fuzzy data matching services.